Difference between revisions of "Music 253"


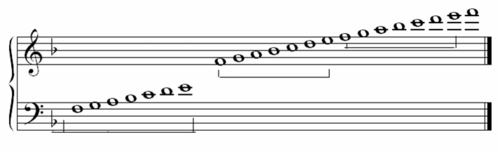

[[File:Essen_gamut.png|500px|thumb|center|The EsAC three-octave "gamut". Pitches are represented by the integers 1..7. The central octave is unsigned. Pitches in the lower octave are preceded by a minus sign (-); pitches in the upper octave by a plus sign (+).]]


Revision as of 00:10, 7 August 2012

Old front page for Music 253: http://www.ccarh.org/courses/253

Contents

- 1 What is Musical Information?

- 2 Basics

- 3 Schemes of Representation

- 4 Applications of Musical Information

- 5 References

What is Musical Information?

Musical information (also called musical informatics) is a body of information used to specify the content of a musical work. There is no single method of representing musical content. Many digital systems of musical information have evolved since the 1950s, when the earliest efforts to generate music by computer were made. In the present day several branches of musical informatics exist. These support applications concerned mainly with sound, mainly with graphical notation, or mainly with analysis.

Musical representation generally refers to a broader body of knowledge with a longer history, spanning both digital and non-digital methods of describing the nature and content of musical material. The syllables do-re-mi (identifying the first three notes of an ascending scale) can be said to represent the beginning of a scale. Unlike graphical notation, which indicates exact pitch, this representation scheme is moveable. It pertains to the first three notes of any ascending scale, irrespective of its pitch.
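The moveable character of the do-re-mi scheme can be illustrated with a minimal sketch. The function name `realize_solfege` and the sharps-only pitch spelling are assumptions made for illustration; a real system would spell pitches according to key (e.g., B-flat rather than A-sharp).

```python
# Semitone offsets of the seven major-scale degrees above the tonic.
MAJOR_SCALE_STEPS = [0, 2, 4, 5, 7, 9, 11]
SOLFEGE = ["do", "re", "mi", "fa", "sol", "la", "ti"]
# Simplified spelling: sharps only (an assumption, not a notational rule).
PITCH_CLASSES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def realize_solfege(syllables, tonic):
    """Map moveable-do syllables to pitch-class names for a given tonic."""
    tonic_index = PITCH_CLASSES.index(tonic)
    realized = []
    for syllable in syllables:
        degree = SOLFEGE.index(syllable)
        semitones = tonic_index + MAJOR_SCALE_STEPS[degree]
        realized.append(PITCH_CLASSES[semitones % 12])
    return realized


# The same three syllables yield different pitches in different keys.
in_c = realize_solfege(["do", "re", "mi"], "C")  # ['C', 'D', 'E']
in_g = realize_solfege(["do", "re", "mi"], "G")  # ['G', 'A', 'B']
```

The syllables stay fixed while the tonic changes, which is the sense in which the representation is independent of exact pitch.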

Basics

Parameters of Musical Information

Pitch

Two kinds of information--pitch and duration--are pre-eminent, for without pitch there is no sound, but pitch without duration has no substance. Trained musicians develop a very refined sense of pitch. Systems for representing pitch span a wide range of levels of specificity. Simple discrimination between ascending and descending pitch movements meets the needs of many young children, while elaborate systems of microtonality exist in some cultures.

There are many graduated continua--diatonic, chromatic, and enharmonic scales--for describing pitch. Absolute measurements such as frequency can be used to describe pitch, but for the purposes of notation and analysis other nomenclature is used to relate a given pitch to its particular musical context.
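As an illustration of an absolute measurement, the sketch below computes frequency from a distance in semitones. It assumes twelve-tone equal temperament and a reference of A4 = 440 Hz; both are common conventions rather than requirements, and the function name `frequency_hz` is hypothetical.

```python
def frequency_hz(semitones_from_a4, a4_hz=440.0):
    """Frequency of a pitch a given number of equal-tempered semitones from A4.

    Assumes twelve-tone equal temperament; other tuning systems give
    different values for the same notated pitch.
    """
    return a4_hz * 2 ** (semitones_from_a4 / 12)


a4 = frequency_hz(0)          # the reference pitch itself
a3 = frequency_hz(-12)        # one octave lower halves the frequency
middle_c = frequency_hz(-9)   # nine semitones below A4, roughly 261.63 Hz
```

A frequency value says nothing about whether the pitch functions as, say, a leading tone or a tonic, which is why notation and analysis rely on other nomenclature.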

Duration

Duration has contrasting features: how long a single note lasts is entirely relative to the rhythmic context in which it exists. Prior to the development of the Musical Instrument Digital Interface (MIDI) in the 1980s, the metronome was the only widely used tool to calibrate the pace of music (its tempo). MIDI provides a method of calibration that facilitates capturing very slight differences of execution in order to "record" performance in a temporally precise way. In most schemes of music representation durational values are far less precise. Recent psychological studies have demonstrated that while human expectations of pitch are precise, a single piece of music accommodates widely discrepant executions of rhythm. People can be conditioned to perform music in a rote manner, with little variation from one performance to another, but deviation from a regular beat is normal.

Other Dimensions of Musical Information

Many other dimensions of musical information exist. Gestural information registers the things a performer may do to execute a work. These could include articulation marks for string instruments; finger numbers and pedal marks for piano playing; breath marks for singers and wind players; heel-toe indicators for organists, and so forth. Some attributes of music that are commonly discussed, such as accent, are implied by notation but are not actually present in the fabric of the musical work. They occur only in its execution.

Domains of Musical Information

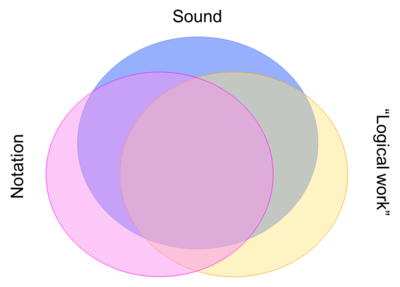

Applications of musical information (or data) are said to exist in various domains. These domains partially overlap in the information they contain, but each contains domain-specific features that cannot be said to belong to the work as a whole. The three most important domains are those of graphics (notation), sound, and that content not dependent on either (usually called the "logical" work).

The Graphical (Notational) Domain

The notation domain requires extensive information about placement on a page or screen.

The Sound Domain

The sound domain requires information about musical timbre. In the notation domain a dynamic change may be represented by a single symbol, but in the sound domain a dynamic change will affect the parameters of a series of notes.

The Logical Domain

The logical domain of music representation is somewhat elusive, for it consists of the parameters required to fully represent a musical work without specific information that facilitates either score- or screen-presentation or sounding artifacts. Pitch and duration remain fundamental, but eighth notes (for example) may lack beams (a property of printing). However, beams can play an important role in cognition (e.g., in recognizing groupings). For this reason the parameters and attributes encoded in a "logical" file are somewhat negotiable.

Systems of Musical Information

Domain differences favor domain-specific approaches: it is more practical to develop and employ code that is task- or repertory-specific than to disentangle large numbers of variables addressing multiple domains in a "complete" representation system.

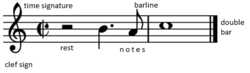

Notation-oriented schemes

Notation programs favor the graphical domain because notated music is only comprehensible if the spatial position of every object is correct and if the visual relationships between objects are precise. Common Western Notation (CMN) is full of graphical conventions that aid vision and comprehension but have little to do with sound. Beamed groups of notes facilitate rapid comprehension. Barlines help users maintain a steady meter.

Sound-oriented schemes

Time is a fundamental variable in sound-oriented approaches to symbolic representation. The steady beat implied for four adjacent quarter notes rarely translates into four time-intervals of a single precise measurement. Repp has shown that deviation from a metronomic beat is the human norm. Our ears resist a completely steady beat, although synchronization with other parts is essential to performance by groups.

Other important qualities of sounding music are timbre, dynamics, and tempo. Timbre defines the quality of the sound produced. Many factors underlie differences in timbre. Dynamics refer to levels of volume. Tempo refers to the speed of the music. Relative changes in dynamics and in tempo are both important in engaging a listener's attention.

Data Interchange between Domains and Schemes

The interchange of musical data is significantly more complex than that of text. Musical data is doubly multi-dimensional. That is, pitch and duration parameters describe a two-dimensional space.

Additionally, most music is polyphonic: it consists of more than one voice sounding simultaneously. So taking all fundamental parameters into account, a musical score is an array of two (or more) dimensional arrays.
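The "array of arrays" idea can be sketched as a data structure. The names `Note`, `score`, and `total_duration` are hypothetical, and the layout shown (one list of notes per voice) is only one of several possible organizations.

```python
from dataclasses import dataclass


@dataclass
class Note:
    pitch: str       # pitch name with octave, e.g. "C4"
    duration: float  # length in quarter notes


# Each voice is a sequence of (pitch, duration) pairs; the score is a
# collection of such sequences sounding simultaneously.
score = {
    "soprano": [Note("E4", 1.0), Note("F4", 1.0), Note("G4", 2.0)],
    "bass": [Note("C3", 2.0), Note("G2", 2.0)],
}


def total_duration(voice):
    """Sum the durations of one voice, in quarter notes."""
    return sum(note.duration for note in voice)


# Voices that sound together should span the same total time.
lengths = {name: total_duration(voice) for name, voice in score.items()}
```

Even this toy structure shows why interchange is harder than for text: the voices must be aligned in time, not merely concatenated.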

There is no default format for musical data (analogous, let us say, to ASCII for text in the Roman alphabet). This absence owes largely to the great range of musical styles and methods of production that exist throughout the world. No one scheme is favorable to all situations. Data interchange inevitably involves making sacrifices. In the world of text applications Unicode facilitates interchange between Roman and non-Roman character sets (Cyrillic, Arabic et al.) for alphabets that are phonetic.

MusicXML

Within the domain of musical notation MusicXML is currently the most widely used scheme for data interchange, particularly for commercial software programs such as Finale and Sibelius. A particular strength of MusicXML is its ability to convert between part-based and score-based codes, owing to its having been modeled bilaterally on MuseData, for which the raw input is part-based, and the Humdrum kern format, which is score-based.

Audio interchange

Many schemes for audio interchange exist in the world of sound applications. Among them are these:

- AIFF has been closely associated since 1988 with Apple computers.

- RIFF has been closely associated since 1991 with Microsoft software.

Both schemes are based on the concept of "chunked" data. In sound files chunks segregate data records according to their purpose. Some records contain header information or metadata; some contain machine-specific code; most define the content of the work.

- MP3 files further a practical interest in processing audio files, which can be exorbitant in size. Compression techniques speed processing but may sacrifice some of the precision in incremental usage.

- MPEG, another compression scheme, is concerned with the synchronization of audio and video compression. A large number of extensions adapt it for other uses.
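The "chunked" layout shared by AIFF and RIFF can be illustrated with a minimal sketch for RIFF. In a RIFF file each chunk begins with a four-character identifier and a little-endian 32-bit length, and chunk data is padded to an even number of bytes. The helper names below are hypothetical, and the sample bytes are dummy data built in memory, not a playable audio file.

```python
import struct


def iter_riff_chunks(data):
    """Yield (chunk_id, payload) for each top-level chunk in a RIFF container."""
    riff_id, riff_size, _form_type = struct.unpack_from("<4sI4s", data, 0)
    if riff_id != b"RIFF":
        raise ValueError("not a RIFF container")
    offset = 12            # skip "RIFF", the size field, and the form type
    end = 8 + riff_size    # the size field counts everything after itself
    while offset + 8 <= end:
        chunk_id, size = struct.unpack_from("<4sI", data, offset)
        payload = data[offset + 8 : offset + 8 + size]
        yield chunk_id.decode("ascii"), payload
        offset += 8 + size + (size & 1)  # odd-length chunks carry a pad byte


def make_chunk(chunk_id, payload):
    """Build one chunk (illustrative helper for constructing the sample)."""
    pad = b"\x00" if len(payload) % 2 else b""
    return chunk_id + struct.pack("<I", len(payload)) + payload + pad


# Dummy container: a 16-byte "fmt " chunk and a 3-byte "data" chunk.
body = b"WAVE" + make_chunk(b"fmt ", b"\x01\x00" * 8) + make_chunk(b"data", b"\x00\x01\x02")
sample = b"RIFF" + struct.pack("<I", len(body)) + body

chunks = [(chunk_id, len(payload)) for chunk_id, payload in iter_riff_chunks(sample)]
```

AIFF follows the same general plan but stores its length fields in big-endian order and opens with the identifier "FORM", so a reader for it would differ in those details.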

The Music Encoding Initiative (MEI)

The Music Encoding Initiative seeks to provide non-commercial standards for the generalized encoding of materials containing music such that the content of the original materials is preserved as literally as possible, while the preparation of new renderings is not inhibited. As a predecessor of both HTML and XML, Standard Generalized Markup Language can be viewed as the primogenitor of projects such as the Text Encoding Initiative (TEI) and MEI. MEI is an XML-based approach suited to the markup of both musical and partially musical materials (i.e., texts with interpolations of music).

Cross-domain interchange

Attempts have been made to develop cross-domain interchange schemes, but to date none has been successful. The default "cross-domain" application is MIDI, to which we devote attention below.

Data Acquisition (Input)

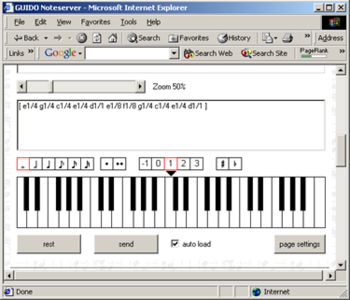

For notation

Many systems for the acquisition of musical data exist. The two most popular today are (1) capture from a musical keyboard (via MIDI) and (2) optical recognition (scanning). Both provide incomplete data for notational applications: keyboard capture misses all the features specific to notation (i.e., not shared by sound); optical recognition is an imperfect science that can miss arbitrary symbols. Commercial programs for optical recognition tend to focus on providing MIDI data rather than a full complement of notational features. The details of what may be missed, or misinterpreted by the software, vary with the program. In either of the above cases serious users must modify files by hand.

Hand-correction of automatically acquired materials can be very costly in terms of time. For some repertories complete encoding by hand may save time and produce a more professional result, but outcomes vary significantly with software, user familiarity with the program, and the quantity of detail beyond pitch and duration that is required.

Statistical measures give little guidance on the efficiency of various input methods. A claimed 90% accuracy rate means little if barlines are in the wrong place, clef changes have been ignored, text underlay is required, and so forth.

Visual prompts for data entry to a virtual keyboard may help novices come to grips with the complexity of musical data. Musicians are accustomed to grasping from conventional notation several features--pitch, duration, articulation--from one reading pass because a musical note combines information about these features in one symbol. However, computers cannot reliably decode these synthetic lumps of information.

For sound

MIDI is the preferred way to capture sound data to a file that can be processed for diverse purposes. MIDI provides a convenient way to store sound data for further use and for sharing. It is frequently used to generate notation and is useful for rough sketches of a work. Its capabilities for refined notation are limited, particularly by its inarticulate representation of pitch.
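The limitation on pitch can be made concrete with a short sketch. MIDI identifies each key by a single number (middle C is 60), so differently spelled notes that share a key collapse to one value. The function name `midi_number` is hypothetical, and labeling middle C as "C4" is one of several octave-naming conventions in use.

```python
NATURAL_SEMITONES = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}
ACCIDENTAL_SHIFT = {"": 0, "#": 1, "b": -1}


def midi_number(letter, accidental, octave):
    """Convert a spelled pitch to a MIDI key number (assumes middle C = C4 = 60)."""
    return 12 * (octave + 1) + NATURAL_SEMITONES[letter] + ACCIDENTAL_SHIFT[accidental]


c_sharp = midi_number("C", "#", 4)  # 61
d_flat = midi_number("D", "b", 4)   # 61 as well: the spelling is lost
```

Going in the other direction, software that derives notation from MIDI must guess whether key 61 should be written as C-sharp or D-flat, usually from the surrounding context.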

For logical information

Logical information is usually encoded by hand or acquired by data translation from another format. For simple repertories and short pieces logical scores may be acquired from MIDI data, but if the intended use is analytical, verification of content is so important that manual data entry may be more efficient.

Data Output

Output is the desired result of all schemes of representation. When output does not match a user's expectations it can be difficult to attribute cause. Errors can result both from flawed or incomplete data and from inconsistencies or lapses in the processing software.

From notation software

Because of the great diversity of musical repertories and consequent variability in notational requirements, no software for musical notation is either perfect or complete. Hand correction is almost always necessary for scores of professional quality. Lapses are greatest for repertories that do not entirely fall within the bounds of Common Western Notation (European-style music composed between c. 1650 and 1950) or which have an exceptionally large number of symbols per page (much of the music of the later nineteenth century, such as that of Verdi and Tchaikovsky). Even some popular repertories are difficult to reproduce (usually because of unconventional requirements) with complete accuracy.

From sound-generating software

The ear is a quicker judge than the eye but also a less forgiving one. Errors in sound files are usually conspicuous, but editing the files can be difficult; how difficult depends mainly on the graphical user interface provided by the sound software.

From logical-data files

Logical-data files are difficult to verify for accuracy and completeness unless they can be channeled either to sound or notational output of some kind. This means that the results of analytical routines run on them can be flawed by the data itself, though the algorithms used in processing must be examined as well.

Schemes of Representation

Notation Codes

Schemes for representing music for notation and display range from lists of codes for the production of musical symbols such as CMN to extensible languages in which symbols are interrelated and may be prioritized in processing. Some notation codes are essentially printing languages in their own right but may lose any sense of musical logic in their assembly.

Some users judge notation codes by the aesthetic qualities of the output. Professional musicians tend to prefer notation that is properly spaced and in which the proportional sizes of objects clarify meaning and facilitate easy reading. For this reason notation software is heavily laden with spacing algorithms and provides the user with many utilities for altering automatic placement and size.

Musical notation is not an enclosed universe. In the West it has been in development for a millennium. In many parts of the Third World cultural values discourage the use of written music other than in clandestine circles of students of one particular musical practice. Contemporary composers constantly stretch the limits of Conventional Western Notation, guaranteeing that graphical notation will continue to evolve.

A fundamental variable of approaches to notation is that of the conceptual organization of a musical work, coupled with the order in which elements are presented. Scores can be imagined as consisting of parts, measures, notes, pages, sections, movements, and so forth. Only one of these can be taken as fundamental; other elements must be related to it.

Early representation systems encoded works page by page. More recent systems lay out a score

Typesetting Codes: CMN, MusiXTeX, and Lilypond

The close orientation towards plotters and printers is evident in many notation codes, such as CMN, an open-source description language. An online manual for this Lisp-based representation, predominantly by Bill Schottstaedt, is available at Stanford.

Also online is a manual describing MusiXTeX, a music-printing system based on TeX, a typesetting language widely used in scientific publishing. MusiXTeX is one of several dialects of music printing in the TeX universe.

The evolution of typesetting from metal through photo-offset to modern digital preparation and printing necessarily changed approaches to music printing. Lilypond, a text-based engraving program, sits somewhat in the tradition of these earlier approaches.

Monophonic Codes: EsAC and Plaine and Easie

Two coding systems that have been unusually durable are the Essen Associative Code (EsAC) and Plaine and Easie. Each is tied to a long-term ongoing project. Both are designed for monophonic music (music for one voice only). Both have been translated into other formats, thus realizing greater potential than the originators imagined.

EsAC

The purpose of EsAC was to encode European folksongs in a compact format. EsAC has a fascinating pre-history, which is rooted in the widespread collections of folksong made in the nineteenth and early twentieth centuries, when German was spoken across a wide swath of the Continent.

A (typescript) code was developed to "notate" each song according to a single set of rules. Some metadata (titles, locales) and musical parameters (meter, key) were noted. The script was necessarily based on ASCII characters. [The underlying code was used for typescript "transcriptions" in unedited collections in Central and Eastern Europe (Austria, Croatia, Poland, Slovenia, Serbia) and elsewhere.]

Adaptation of this material to mainframe computers was begun in the 1970s by the Deutsches Volksliedarchiv (DVA) in Freiburg im Breisgau (Germany). A pioneering work of musical analysis across the different repertories encoded was completed by Wolfram Steinbeck (1982). This inspired the late Helmut Schaffrath, an active member of the International Council for Traditional Music, to adapt some of the DVA materials to a minicomputer. Several of his students at the Essen Hochschule für Musik (later renamed the Folkwang Universität der Künste) wrote software to implement analysis routines commonly applied to folksongs. The subset became the Essen Folksong Collection; the code, correspondingly, became EsAC. Schaffrath's work, which took a separate direction from that of the DVA, was carried out between 1982 and his death (1994). The work was later continued by Ewa Dahlig-Turek at the EsAC data website.

The EsAC encoding system is easily understood within the context of its original purposes: it is designed for music which is monophonic. Although all the works have lyrics, the encoding system was not designed to accommodate lyrics. (Efforts to add them have disclosed certain limitations of the system.) The range of a human voice rarely exceeds three octaves, but given that the ideal tessitura varies from one person to another, the concept of a fixed key is of negligible importance. Pitch is therefore encoded according to a relative system accommodating a three-octave span [Table 2.]

A smallest duration, given in header (preliminary) information, is taken as the default value. The increments of longer note values are indicated by underline characters. Sample files are shown in this handout of EsAC samples. Further details are given in Beyond MIDI (http://www.ccarh.org/publications/books/beyondmidi/), Ch. 24.
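The pitch and duration conventions just described can be sketched as a small parser. This is a simplified reading, not a full EsAC implementation: it handles only the scale degrees 1..7, the octave signs, and underline characters, and it silently skips everything else (rests, accidentals, dots, barlines). The function name `parse_esac_fragment` is hypothetical, and the assumption that each underline doubles the duration should be checked against Beyond MIDI, Ch. 24.

```python
import re

# One note: optional octave sign, a scale degree 1..7, then zero or more underlines.
NOTE_PATTERN = re.compile(r"([+-]?)([1-7])(_*)")
OCTAVE_SHIFT = {"": 0, "-": -1, "+": 1}


def parse_esac_fragment(text):
    """Return (octave_shift, scale_degree, duration_in_units) for each note.

    Durations are multiples of the smallest value declared in the file header.
    Assumption: each underline doubles the duration of the note.
    """
    notes = []
    for sign, degree, underlines in NOTE_PATTERN.findall(text):
        duration = 2 ** len(underlines)
        notes.append((OCTAVE_SHIFT[sign], int(degree), duration))
    return notes


# Degree 5 in the lower octave, then degrees 1 and 3 in the central octave,
# then degree 1 in the upper octave held four units.
melody = parse_esac_fragment("-5 1_ 3 +1__")
```

Because pitches are stored as scale degrees rather than note names, the same encoded melody can be realized at whatever tonic suits the singer.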

Plaine and Easie

The purpose of Plaine and Easie code was to prepare virtual catalogues of musical manuscripts.

Polyphonic Codes: DARMS and SCORE

XML-Oriented Codes: MuseSCORE

Sound-Related Codes

MIDI

Music V and CSound

Conducting Codes

Systems for Synchronization of Notation, Sound, and Analysis

MuseData

Humdrum kern

Codes for Data Interchange

Applications of Musical Information

References

1. Musical symbol list (incomplete): http://en.wikipedia.org/wiki/List_of_musical_symbols

2. Repp, Bruno. "Variations on a Theme by Chopin: Relations between Perception and Production of Timing in Music," Journal of Experimental Psychology: Human Perception and Performance, 24/3 (1998), 791-811 [ http://www.brainmusic.org/EducationalActivitiesFolder/Repp_Chopin1998.pdf]

3. Steinbeck, Wolfram. Struktur und Ähnlichkeit. Methoden automatisierter Melodienanalyse (= Kieler Schriften zur Musikwissenschaft 25). Habilitationsschrift. Kassel, 1982.