Music 253


See also the [[Music 253/CS 275a Syllabus]].

__TOC__

==What is Musical Information?==

<i>Musical information</i> (also called <i>musical informatics</i>) is a body of information used to specify the content of a musical work. There is no single method of representing musical content. Many digital systems of musical information have evolved since the 1950s, when the earliest efforts to generate music by computer were made. In the present day several branches of musical informatics exist. These support applications concerned mainly with sound, mainly with graphical notation, or mainly with analysis.

<i>Musical representation</i> generally refers to a broader body of knowledge with a longer history, spanning both digital and non-digital methods of describing the nature and content of musical material. The syllables do-re-mi (identifying the first three notes of an ascending scale) can be said to <i>represent</i> the beginning of a scale. Unlike graphical notation, which indicates exact pitch, this representation scheme is moveable. It pertains to the first three notes of any ascending scale, irrespective of its pitch.
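To make the idea of a moveable representation concrete, here is a minimal Python sketch that realizes the same syllables in two different keys. It assumes major mode only, pitch classes numbered 0-11 starting from C, and naive sharps-only spelling; the names `realize`, `SOLFEGE_STEPS`, and `PITCH_CLASS_NAMES` are hypothetical.

```python
# Moveable do: syllables name scale degrees, not fixed pitches.
# Assumptions for illustration: major mode only, sharps-only spelling, C = 0.
SOLFEGE_STEPS = {"do": 0, "re": 2, "mi": 4, "fa": 5, "sol": 7, "la": 9, "ti": 11}
PITCH_CLASS_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def realize(syllables, tonic_pc):
    """Map solfege syllables to pitch-class names in the major key whose tonic is tonic_pc (0-11)."""
    return [PITCH_CLASS_NAMES[(tonic_pc + SOLFEGE_STEPS[s]) % 12] for s in syllables]


phrase = ["do", "re", "mi"]
print(realize(phrase, 0))  # tonic C -> ['C', 'D', 'E']
print(realize(phrase, 7))  # tonic G -> ['G', 'A', 'B']
```

The encoded phrase never changes; only the tonic supplied at realization time does.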

==Applications of Musical Information==

Apart from obvious uses in recognizing, editing, and printing, musical information supports a wide range of applications in certain areas of real and virtual performance, creative expression, and musical analysis. While it is impossible to treat any of these at length, some sample applications are shown here.

==Parameters, Domains, and Systems==

===Parameters of Musical Information===

Two kinds of information--<i>pitch</i> and <i>duration</i>--are pre-eminent, for without [http://en.wikipedia.org/wiki/Pitch_(music) pitch] there is no sound, but pitch without duration has no substance. Beyond these two families of parameters, others are used selectively to support specific application areas.

====Pitch====

Trained musicians develop a very refined sense of pitch. Systems for representing pitch span a wide range of levels of specificity. Simple discrimination between ascending and descending pitch movements meets the needs of many young children, while elaborate systems of microtonality exist in some cultures.

There are many graduated continua--diatonic, chromatic, and enharmonic [http://en.wikipedia.org/wiki/Musical_scale scales]--for describing pitch. Absolute measurements such as frequency can be used to describe pitch, but for the purposes of notation and analysis other nomenclature is used to relate a given pitch to its particular musical context.
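The contrast between absolute measurement and contextual naming can be sketched in Python by mapping a frequency to the nearest equal-tempered pitch name. This sketch assumes twelve-tone equal temperament, A4 = 440 Hz, the MIDI convention that middle C (C4) is number 60, and sharps-only spelling; `describe_frequency` is a hypothetical helper. Note that a frequency alone cannot say whether a note should be called F-sharp or G-flat, which is precisely the contextual information notation and analysis require.

```python
import math

NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def describe_frequency(freq_hz, a4_hz=440.0):
    """Return (name, MIDI number, cents) for the equal-tempered pitch nearest to freq_hz."""
    exact = 69 + 12 * math.log2(freq_hz / a4_hz)  # fractional MIDI number; A4 = 69
    midi = round(exact)
    cents = round(100 * (exact - midi), 1)        # 100 cents per tempered semitone
    name = f"{NAMES[midi % 12]}{midi // 12 - 1}"  # MIDI 60 -> "C4"
    return name, midi, cents


for f in (440.0, 261.63, 445.0):
    print(f, describe_frequency(f))
```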

| + | |||

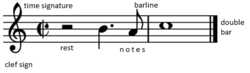

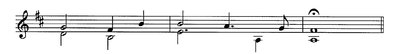

| + | [[File:GeneralExample1.png|250px|thumb|right|Basic elements of musical notation.]] | ||

====Duration====

[http://en.wikipedia.org/wiki/Duration_(music) Duration] has contrasting features: how long a single note lasts is entirely relative to the rhythmic context in which it exists. Prior to the development of the [http://en.wikipedia.org/wiki/Musical_Instrument_Digital_Interface Musical Instrument Digital Interface (MIDI)] in the mid-1980s, the [http://en.wikipedia.org/wiki/Metronome metronome] was the only widely used tool to calibrate the pace of music (its tempo). MIDI provides a method of calibration that captures very slight differences in execution, so that a performance can be "recorded" in a temporally precise way. In most schemes of music representation, duration values are far less precise. Recent psychological studies have demonstrated that while human expectations of pitch are precise, a single piece of music accommodates widely discrepant executions of rhythm. People can be conditioned to perform music in a rote manner, with little variation from one performance to another, but deviation from a regular beat is normal.
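Because notated durations are relative, their absolute length depends on tempo. The following Python sketch assumes durations expressed in quarter-note units and a metronome marking given per quarter note; it shows the same notated rhythm at two tempos. `to_seconds` is a hypothetical helper.

```python
from fractions import Fraction


def to_seconds(durations_in_quarters, quarter_bpm):
    """Convert notated values (in quarter-note units) to seconds at a tempo of quarter_bpm."""
    seconds_per_quarter = Fraction(60) / Fraction(quarter_bpm)
    return [float(d * seconds_per_quarter) for d in durations_in_quarters]


# quarter, two eighths, half
rhythm = [Fraction(1), Fraction(1, 2), Fraction(1, 2), Fraction(2)]
print(to_seconds(rhythm, 60))   # [1.0, 0.5, 0.5, 2.0]
print(to_seconds(rhythm, 120))  # [0.5, 0.25, 0.25, 1.0]
```

Exact fractions keep the notated proportions intact; conversion to floating-point seconds happens only at the last step.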

====Other Dimensions of Musical Information====

===Domains of Musical Information=== | ===Domains of Musical Information=== | ||

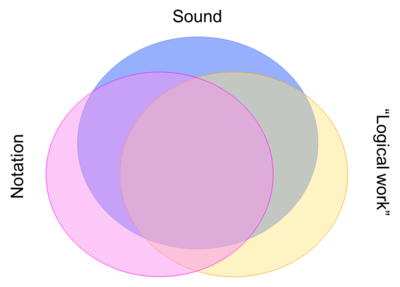

| − | Applications of musical information (or data) are said to exist in various <i>domains</i>. | + | Applications of musical information (or data) are said to exist in various <i>domains</i>. These domains partially overlap in the information they contain, but each contains domain-specific features that cannot be said to belong to the work as a whole. The three most important domains are those of graphics (notation), sound, and that content not dependent on either (usually called the "logical" work). The range of parameters which are common to all three domains, though difficult to portray precisely, is much smaller than the composite list of all of them, as the illustration below attempts to show. |

[[File:ThreeDomains_2012.png|400px|thumb|center|Schematic view of the three main domains of musical information.]]

====The Graphical (Notational) Domain====

The <i>notation</i> domain requires extensive information about placement on a page or screen. Musical notation works very differently from text for two main reasons: (1) every note simultaneously represents an arbitrary number of [http://en.wikipedia.org/wiki/Parameters parameters] of sound and (2) most notated scores are [http://en.wikipedia.org/wiki/Polyphony polyphonic].

| + | |||

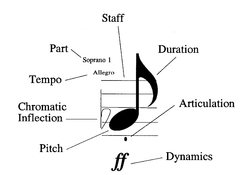

| + | [[File:TheNote.bmp|250px|thumb|right|Attributes of a single musical note. Each attribute suggests the need for a separate encoded parameter.]] | ||

| + | |||

| + | In contrast to scanned images of musical scores, encoded scores facilitate the analysis and re-display of these many parameters, singly or in any combination. Extracted parameters can also be recombined to create new works (a topic treated at Stanford in a separate course, Music 254/CS 275B). | ||
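A minimal Python sketch of what "encoded parameters" can mean in practice: each note carries several independent fields, and any one of them, or any combination, can be extracted or recombined. The `Note` class and its field set are hypothetical and far smaller than a real notation encoding.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Note:
    """One encoded note; each field is a separately encoded parameter (illustrative field set)."""
    pitch: str                          # e.g. "F#4"
    duration: float                     # in quarter notes
    stem_up: bool                       # notation-only parameter
    articulation: Optional[str] = None  # e.g. "staccato"
    dynamic: Optional[str] = None       # e.g. "p"


melody = [
    Note("E4", 1.0, True, dynamic="p"),
    Note("F#4", 0.5, True, articulation="staccato"),
    Note("G4", 0.5, True, articulation="staccato"),
    Note("A4", 2.0, True),
]

# Extract parameters singly or in combination, leaving the others untouched.
pitches = [n.pitch for n in melody]
rhythm = [n.duration for n in melody]
staccato_pitches = [n.pitch for n in melody if n.articulation == "staccato"]

# Recombine: the same pitches set to the rhythm played backwards.
recombined = list(zip(pitches, reversed(rhythm)))
print(pitches)           # ['E4', 'F#4', 'G4', 'A4']
print(staccato_pitches)  # ['F#4', 'G4']
print(recombined)        # [('E4', 2.0), ('F#4', 0.5), ('G4', 0.5), ('A4', 1.0)]
```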

====The Sound Domain====

The <i>sound</i> domain requires information about musical [http://en.wikipedia.org/wiki/Timbre timbre]. In the notation domain a [http://en.wikipedia.org/wiki/Crescendo#Gradual_changes dynamic change] may be represented by a single symbol, but in the sound domain a dynamic change will affect the parameters of a series of notes. In relation to notated music, sound makes phenomenal what is in the written context merely verbal (tempo, dynamics) or symbolic (pitch, duration). In its emphasis on the distinctions between verbal prescriptions and actual (sounding) phenomena, [http://en.wikipedia.org/wiki/Semiotic semiotics] offers a ready-made intellectual framework for understanding music encoding in relation to musical sound.
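The difference between one notated symbol and many sounding values can be sketched as follows. This Python example assumes, purely for illustration, that a hairpin from <i>p</i> to <i>f</i> maps to MIDI velocities 48 to 96 and that the change is linear; performers rarely shape dynamics so uniformly. `expand_hairpin` is a hypothetical name.

```python
def expand_hairpin(n_notes, start_velocity, end_velocity):
    """Spread one notated crescendo or diminuendo over n_notes as per-note MIDI velocities.

    Uses a linear ramp, clamped to the MIDI velocity range 1-127.
    """
    if n_notes < 1:
        return []
    if n_notes == 1:
        return [start_velocity]
    step = (end_velocity - start_velocity) / (n_notes - 1)
    return [max(1, min(127, round(start_velocity + i * step))) for i in range(n_notes)]


# One hairpin symbol in the score becomes five separate values in the sound domain.
print(expand_hairpin(5, 48, 96))  # [48, 60, 72, 84, 96]
```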

====The Logical Domain====

The <i>logical</i> domain of music representation is somewhat elusive in that while it retains parameters required to fully represent a musical work, it shuns information that is exclusively concerned with sight or sound. Pitch and duration remain fundamental to musical identity, but whether eighth notes are beamed together or have separate stems is exclusively a concern of printing. If, however, beamed groups of notes are considered (in a particular context) to play an important role in some aspect of visual cognition, they may be considered conditionally relevant. The parameters and attributes encoded in a "logical" file are therefore negotiable and, from one case to the next, may seem ambiguous or contradictory.

===Systems of Musical Information===

Many parametric codes for music have been invented without adequate consideration of their intended purpose and the consequent requirements. A systematic approach normally favors a top-down consideration of which domain(s) will be emphasized. Many parameters are notation-specific, while others are sound-specific.

Domain differences favor domain-specific approaches: it is more practical to develop and employ code that is task- or repertory-specific than to disentangle large numbers of variables addressing multiple domains in a "complete" representation system. However, if the purpose is to support interchange between domains (as is often the case with music-information retrieval and music analysis), then planning how to link information across domains becomes very important.

====Notation-oriented schemes====

Notation programs favor the <i>graphical</i> domain because notated music is only comprehensible if the spatial position of every object is correct and if the visual relationships between objects are precise. Common Western Notation (CMN) in particular has an elaborate visual grammar. There are also national conventions and publisher-specific style requirements to consider. Here we concentrate on CMN but emphasize that as the requirements of music change, the [http://www.britannica.com/EBchecked/topic/399202/musical-notation?anchor=ref530045 manner in which music is notated] also changes. Non-common notations often require special software for rendering.

Notational information links poorly with sound files. It is optimized for rapid reading and for quick comprehension by performers. At an abstract level, the visual requirements of notation have little to do with sound. Beamed groups provide an apt example of the need to facilitate rapid comprehension: beams mean nothing in sound. Barlines are similarly silent, but they enable users to maintain a steady meter.

====Sound-oriented schemes====

Although notation describes meter and rhythm, it does so symbolically. In an ideal sense, no time need elapse for this description. In sound files, however, <i>time</i> is a fundamental variable. [http://en.wikipedia.org/wiki/Time_(music) <i>Time</i>] operates simultaneously on two (sometimes more) levels. [http://en.wikipedia.org/wiki/Meter_(music) <i>Meter</i>] gives an underlying pulse which is never entirely metronomic in performance (Repp [3]). Whereas in a score the intended meter can usually be determined from a single global parameter (the time signature), in a sound file it must be deduced. The object of interest then becomes the deviation of every sounding pitch from this implied steadiness.
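Deducing an implied beat and measuring deviations from it can be sketched as a straight-line fit of onset times against beat number. This Python example assumes exactly one onset per beat, already identified and in order, which is a large simplification of real beat tracking; the onset values are invented for illustration and `fit_beat_grid` is a hypothetical name.

```python
def fit_beat_grid(onsets):
    """Fit onsets[k] ~ offset + period * k by least squares.

    Assumes exactly one onset per beat, in order (a strong simplification of beat tracking).
    Returns (offset, period, deviations), deviations in the same units as the onsets.
    """
    n = len(onsets)
    if n < 2:
        raise ValueError("need at least two onsets")
    mean_k = (n - 1) / 2
    mean_t = sum(onsets) / n
    cov = sum((k - mean_k) * (t - mean_t) for k, t in enumerate(onsets))
    var = sum((k - mean_k) ** 2 for k in range(n))
    period = cov / var
    offset = mean_t - period * mean_k
    deviations = [t - (offset + period * k) for k, t in enumerate(onsets)]
    return offset, period, deviations


performed = [0.00, 0.52, 0.98, 1.53, 2.00]  # seconds (invented example data)
offset, period, deviations = fit_beat_grid(performed)
print(f"estimated tempo: {60 / period:.1f} beats per minute")
print([round(d * 1000) for d in deviations])  # deviation per beat, in milliseconds
```

The fitted line plays the role of the implied steady beat; the per-beat residuals are the expressive deviations.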

Apart from pitch and duration, other important qualities of sounding music are timbre, dynamics, and tempo. [http://en.wikipedia.org/wiki/Timbre <i>Timbre</i>] defines the quality of the sound produced; many factors underlie differences in timbre. [http://en.wikipedia.org/wiki/Dynamics_(music) <i>Dynamics</i>] refer to levels of volume. [http://en.wikipedia.org/wiki/Tempo <i>Tempo</i>] refers to the speed of the music. Relative changes in dynamics and tempo are both important in engaging a listener's attention.

Sound files are synchronized with notation files only with difficulty, mainly because what is precise in one domain is vague in the other, and vice versa.

===Data Acquisition (Input)===

====For notation====

Many systems for the acquisition of musical data exist. The two most popular forms of capture today are (1) from a musical keyboard (via MIDI) and (2) via optical recognition (scanning). Both provide incomplete data for notational applications: keyboard capture misses many features that are specific to notation and not shared by sound (beams, slurs, ornaments, and so forth); optical recognition is an imperfect science that can fail to "see" or can misinterpret arbitrary symbols. Commercial programs for optical recognition tend to focus on providing MIDI data rather than a full complement of notational features. The details of what may be missed or misinterpreted vary with the program. In either case serious users must modify files by hand.

Hand-correction of automatically acquired materials can be very costly in terms of time. For some repertories complete encoding by hand may save time and produce a more professional result, but outcomes vary significantly with software, user familiarity with the program, and the quantity of detail beyond pitch and duration that is required.

Statistical measures give little guidance on the efficiency of various input methods. A claimed 90% accuracy rate means little if barlines are in the wrong place, clef changes have been ignored, text underlay is required, and so forth.
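A short calculation shows why a single accuracy figure says little. Assuming, unrealistically, that every symbol is recognized independently with the same 90% accuracy, the share of measures needing no correction falls quickly with the number of symbols per measure (about 12% at 20 symbols). Real errors cluster, and this arithmetic says nothing about which symbols fail, which matters more than the count. `error_free_fraction` is a hypothetical name.

```python
def error_free_fraction(symbol_accuracy, symbols_per_measure):
    """Expected share of measures with no recognition error, if each symbol fails independently."""
    return symbol_accuracy ** symbols_per_measure


for n in (5, 10, 20, 40):
    share = error_free_fraction(0.90, n)
    print(f"{n:>2} symbols per measure: {share:.0%} of measures need no correction")
```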

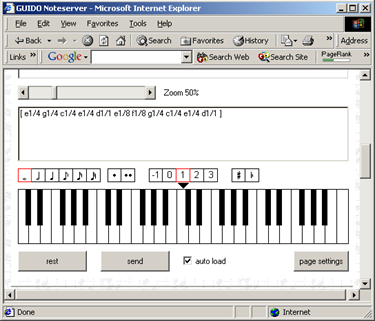

| − | + | [[File:GuidoKeyboard.png|425px|thumb|left|Virtual keyboard used by the [https://en.wikipedia.org/wiki/GUIDO_music_notation Guido Notation System]. Pitch can be entered from it. Duration is specified from the menu of note values above the keyboard. The resulting code, which can also be written by hand, is shown in the upper window.]] | |

| − | + | Visual prompts for data entry to a virtual keyboard may help novices come to grips with the complexity of musical data. Musicians are accustomed to grasping from conventional notation several features--pitch, duration, articulation--from one reading pass because a musical note combines information about these features in one symbol. However, computers cannot reliably decode these synthetic lumps of information. | |

====For sound====

MIDI is the preferred way to capture sound data to a file that can be processed for diverse purposes. MIDI provides a convenient way to store sound data for further use and for sharing. It is frequently used to generate notation and is useful for rough sketches of a work. Its capabilities for refined notation are limited, particularly by its inarticulate representation of pitch: a MIDI note is identified only by a key number, so enharmonic spellings such as C-sharp and D-flat cannot be distinguished.
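The loss of spelling can be illustrated with a small Python sketch that converts spelled pitch names to MIDI key numbers, assuming the common convention that middle C (C4) is 60. Different spellings collapse to the same number, so notation software reading MIDI must guess the spelling from context. `midi_number` is a hypothetical helper, not part of any MIDI library.

```python
import re

STEP_PC = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def midi_number(name):
    """Convert a spelled pitch such as 'C#4' or 'Db4' to a MIDI key number (C4 = 60)."""
    match = re.fullmatch(r"([A-G])(#{1,2}|b{1,2})?(-?\d)", name)
    if match is None:
        raise ValueError(f"unrecognized pitch name: {name!r}")
    step, accidental, octave = match.groups()
    alter = 0
    if accidental:
        alter = len(accidental) if accidental[0] == "#" else -len(accidental)
    return 12 * (int(octave) + 1) + STEP_PC[step] + alter


# Three different spellings, one MIDI key number: the spelling cannot be recovered.
for spelling in ("C#4", "Db4", "B##3"):
    print(spelling, midi_number(spelling))
```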

====For logical information====

Logical information is usually encoded by hand or acquired by data translation from another format. For simple repertories and short pieces logical scores may be acquired from MIDI data, but if the intended use is analytical, then verification of content is so important that manual data entry may be more efficient.
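Deriving a logical score from MIDI typically involves quantization: snapping performed onsets to a rhythmic grid. The Python sketch below assumes onsets already converted to beats (that is, the tempo is known) and a sixteenth-note grid; the data are invented and the function names hypothetical. The last line shows why verification matters: a triplet onset cannot sit on a sixteenth grid and is silently distorted.

```python
from fractions import Fraction


def quantize(onsets_in_beats, grid=Fraction(1, 4)):
    """Snap performed onsets (measured in beats) to the nearest multiple of grid."""
    return [round(Fraction(t) / grid) * grid for t in onsets_in_beats]


def inter_onset_durations(onsets):
    """Durations implied by successive onsets (the last note's length is unknown here)."""
    return [b - a for a, b in zip(onsets, onsets[1:])]


performed = [0.0, 0.98, 1.52, 2.27, 3.01]  # invented onsets, already converted to beats
grid_onsets = quantize(performed)
print(grid_onsets)                         # onsets 0, 1, 3/2, 9/4, 3
print(inter_onset_durations(grid_onsets))  # durations 1, 1/2, 3/4, 3/4
print(quantize([1 / 3]))                   # a triplet onset forced onto the grid: 1/4
```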

===Data Output===

All schemes of music representation are based on the assumption that users will wish to access and extract data. When output does not match a user's expectations it can be difficult to attribute a cause. Errors can result both from flawed or incomplete data and from inconsistencies or lapses in the processing software. Moreover, all schemes of music representation are necessarily incomplete, because music itself is constantly in flux.

====From notation software====

The extraction of data sufficient to provide conventional music notation requires a considerable number of parameters. Hand correction is almost always necessary for scores of professional quality.

Lapses are greatest for repertories that do not entirely fall within the bounds of Common Western Notation (European-style music composed between c. 1650 and 1950) or which have an exceptionally large number of symbols per page (much of the music of the later nineteenth century, such as that of [http://en.wikipedia.org/wiki/Giuseppe_Verdi Verdi] and [http://en.wikipedia.org/wiki/Tchaikovsky Tchaikovsky]). Even some popular repertories are difficult to reproduce (usually because of unconventional requirements) with complete accuracy.

====From sound-generating software====

The ear is a quicker judge than the eye but also a less forgiving one. Errors in sound files are usually conspicuous, but editing the files can be difficult; how difficult depends mainly on the graphical user interface provided by the sound software.

====From logical-data files====

Logical-data files are difficult to verify for accuracy and completeness unless they can be channeled either to sound or notational output of some kind. This means that the results of analytical routines run on them can be flawed by the data itself, though the algorithms used in processing must be examined as well.

==Schemes of Representation==

===Notation Codes===

Schemes for representing music for notation and display range from lists of codes for the production of musical symbols such as CMN to extensible languages in which symbols are interrelated and may be prioritized in processing. Some notation codes are essentially printing languages in their own right but may lose any sense of musical logic in their assembly.

Some users judge notation codes by the aesthetic qualities of the output. Professional musicians tend to prefer notation that is properly spaced and in which the proportional sizes of objects clarify meaning and facilitate easy reading. For this reason notation software is heavily laden with spacing algorithms and provides the user with many utilities for altering automatic placement and size.

Musical notation is not an enclosed universe. In the West it has been in development for a millennium. In many parts of the Third World cultural values discourage the use of written music other than in clandestine circles of students of one particular musical practice. Contemporary composers constantly stretch the limits of Conventional Western Notation, guaranteeing that graphical notation will continue to evolve.

A fundamental variable of approaches to notation is that of the conceptual organization of a musical work, coupled with the order in which elements are presented. Scores can be imagined as consisting of parts, measures, notes, pages, sections, movements, and so forth. Only one of these can be taken as fundamental; other elements must be related to it.

Early representation systems encoded works page by page. More recent systems lay a score out on a horizontal scroll that can be segmented according to page layout instructions provided by the user.
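Segmenting a horizontal scroll into systems and pages can be sketched with a greedy line breaker. This Python example assumes each measure has a fixed width and is never split across systems; production engraving software typically uses more elaborate methods that also adjust spacing. The widths and function names are hypothetical.

```python
def break_into_systems(measure_widths, system_width):
    """Greedily fill each system (line) with whole measures; return lists of measure indices."""
    systems, current, used = [], [], 0.0
    for i, width in enumerate(measure_widths):
        if current and used + width > system_width:
            systems.append(current)  # close the full system
            current, used = [], 0.0
        current.append(i)            # an oversized measure still gets its own system
        used += width
    if current:
        systems.append(current)
    return systems


def group_into_pages(systems, systems_per_page):
    """Split the list of systems into pages holding at most systems_per_page systems."""
    return [systems[i:i + systems_per_page] for i in range(0, len(systems), systems_per_page)]


scroll = [40, 55, 30, 70, 45, 50, 35]  # hypothetical measure widths in millimeters
systems = break_into_systems(scroll, system_width=150)
print(systems)                       # [[0, 1, 2], [3, 4], [5, 6]]
print(group_into_pages(systems, 2))  # [[[0, 1, 2], [3, 4]], [[5, 6]]]
```

Because the scroll is stored independently of layout, changing `system_width` or `systems_per_page` re-segments the same encoded music without touching it.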

====Monophonic Codes: EsAC and Plaine and Easie Code====

Two coding systems that have been unusually durable are the <i>Essen Associative Code</i> (EsAC) and <i>Plaine and Easie</i>. Each is tied to a long-term ongoing project. Both are designed for monophonic music (music for one voice only). Both have been translated into other formats, thus realizing greater potential than the originators imagined.

=====EsAC=====

The purpose of EsAC was to encode European folksongs in a compact format. EsAC has a fascinating pre-history, which is rooted in the widespread collections of folksong made in the nineteenth and early twentieth centuries, when German was spoken across a wide swath of the Continent.

A (typescript) code was developed to "notate" each song according to a single set of rules. Some metadata (titles, locales) and musical parameters (meter, key) were noted. The script was necessarily based on ASCII characters. [The underlying code was used for typescript "transcriptions" in unedited collections in Central and Eastern Europe (Austria, Croatia, Poland, Slovenia, Serbia) and elsewhere.]

Adaptation of this material to mainframe computers was begun in the 1970s by the [http://www.dva.uni-freiburg.de/ Deutsche Volkslied Archiv (DVA)] in [http://en.wikipedia.org/wiki/Freiburg_im_Breisgau Freiburg im Breisgau] (Germany). A pioneering work of musical analysis across the different encoded repertories was completed by Wolfram Steinbeck (1982). This inspired the late Helmut Schaffrath, an active member of the International Council for Traditional Music, to adapt some of the DVA materials to a minicomputer. Several of his students at the Essen Hochschule für Musik (later renamed the [http://www.folkwang-uni.de/home/musik/ Folkwang Universität der Künste]) wrote software to implement analysis routines commonly applied to folksongs. The subset became the Essen Folksong Collection; the code, correspondingly, became EsAC. Schaffrath's work, which took a separate direction from that of the DVA, was carried out between 1982 and his death (1994). The work was later continued by [http://pl.wikipedia.org/wiki/Ewa_Dahlig-Turek Ewa Dahlig-Turek] at the [http://www.esac-data.org/ EsAC data website].

The EsAC encoding system is easily understood within the context of its original purposes: it is designed for music which is monophonic. Although all the works have lyrics, the encoding system was not designed to accommodate lyrics. (Efforts to add them have disclosed certain limitations of the system.) The range of a human voice rarely exceeds three octaves, but given that the ideal <i>tessitura</i> varies from one person to another, the concept of a fixed key is of negligible importance. Pitch is therefore encoded according to a relative system accommodating a three-octave span [Table 2.]

| + | |||

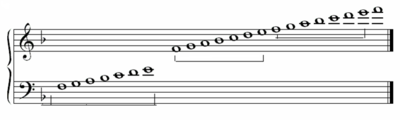

| + | [[File:Essen_gamut.png|400px|thumb|center|The EsAC three-octave "gamut". Pitches are represented by the integers 1..7. The central octave is unsigned. Pitches in the lower octave are preceded by a minus sign (-); pitches in the upper octave by a plus sign (+).]] | ||

The smallest duration, given in the header (preliminary) information, is taken as the default value. Longer note values are indicated by underline characters, each of which adds one increment of that default value. Sample files are shown in this handout of [[Media:EsAC-Samples.PDF| EsAC samples]]. Further details are given in [http://www.ccarh.org/publications/books/beyondmidi/ <i>Beyond MIDI</i>], Ch. 24.
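A minimal Python sketch of the relative pitch and duration scheme just described. It assumes a simplified token form (an optional octave sign, a degree 1-7, then zero or more underlines), a major-mode mapping of degrees to semitones, and a default duration and tonic passed as parameters rather than read from a header. The names `decode_esac`, `base_unit`, and `tonic_midi` are hypothetical, and any symbols of the full code beyond this subset are simply skipped.

```python
import re
from fractions import Fraction

# Simplified token: optional octave sign, scale degree 1-7, then zero or more underlines.
TOKEN = re.compile(r"([+-]?)([1-7])(_*)")
MAJOR_STEPS = [0, 2, 4, 5, 7, 9, 11]  # assumed major mode: semitones above the tonic
OCTAVE = {"-": -1, "": 0, "+": 1}     # lower, central (unsigned), upper octave


def decode_esac(melody, base_unit=Fraction(1, 8), tonic_midi=60):
    """Return (MIDI number, duration as a fraction of a whole note) for each note token."""
    notes = []
    for sign, degree, underlines in TOKEN.findall(melody):
        pitch = tonic_midi + 12 * OCTAVE[sign] + MAJOR_STEPS[int(degree) - 1]
        duration = base_unit * (1 + len(underlines))  # each underline adds one base unit
        notes.append((pitch, duration))
    return notes


print(decode_esac("1 2 3_ -5 +1__"))
# Transposing needs no change to the encoding, only a different tonic:
print(decode_esac("1 2 3_ -5 +1__", tonic_midi=67))
```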

=====Plaine and Easie Code=====

The purpose of [http://www.iaml.info/activities/projects/plain_and_easy_code Plaine and Easie (P&E)] code was to prepare virtual catalogues of musical manuscripts. It was designed to give an exact, complete description of every indication on a musical score. Although only incipits (beginning passages) are encoded, a P&E encoding might include tempo and dynamic markings; grace notes, appoggiaturas, and other [http://en.wikipedia.org/wiki/Appoggiatura#Appoggiatura ornaments]; marks for [http://en.wikipedia.org/wiki/Articulation_(music) staccato and legato]; and so forth. It is based on the original, unedited music, including [http://en.wikipedia.org/wiki/Clef clef signs] rarely seen today and word spellings that may be obsolescent.

P&E has always been associated with the international online catalogue of (notated) music sources developed by [http://www.rism.info/ RISM]. The online project was originally focused on music manuscripts from the seventeenth and eighteenth centuries, but parallel projects initially committed to paper are scheduled to appear online. At this writing (January 2014) the online [http://opac.rism.info/index.php?id=2&L=1 RISM database] of manuscripts contains <i>c</i>. 875,000 entries, which come from more than 60 countries. This constellation of projects was begun in 1952. The database structure in which the musical data exists has more than a hundred text fields and is searchable in many ways. Links to the digitized manuscripts directly from the RISM listing are updated monthly.

====Typesetting Codes: CMN, MusiXTeX, Lilypond, Guido, ABCplus====

Typesetting codes make extensive provision for the appearance of notated music. Typesetting has a great need for parameters (for page size and orientation; for font information; for a long list of relationships--between staff lines, between lyrics and adjacent staves, between syllables of a text, between titles, headers, and staves; and for the placement and orientation of rests, fermatas, beams, slurs, and so forth). Earlier typesetting-oriented codes may begin with machine-specific indications for a hardware environment, although over time scalable [http://en.wikipedia.org/wiki/Vector_graphics_editor vectors] have largely replaced this kind of specificity. Other approaches, such as [http://en.wikipedia.org/wiki/Common_Music_Notation CMN], an open-source music description language, focus mainly on objects and leave issues of placement open. An [https://ccrma.stanford.edu/software/cmn/cmn/cmn.html online manual] for this Lisp-based representation, predominantly by Bill Schottstaedt, is available at Stanford.

Also online is a manual describing [http://icking-music-archive.org/software/musixtex/musixdoc.pdf MusiXTeX], a music-printing system based on [http://en.wikipedia.org/wiki/TeX TeX], a typesetting language widely used in scientific publishing. MusiXTeX is one of several dialects of music printing in the TeX universe. MusiXTeX was used to create the large [http://en.wikipedia.org/wiki/Werner_Icking_Music_Archive Werner Icking Music Archive] of music scores.

The evolution of typesetting from metal through photo-offset to modern digital preparation and printing necessarily changed approaches to music printing. [http://lilypond.org/text-input.html Lilypond], a text-based engraving program, sits somewhat in the tradition of these earlier approaches.

Two more collaborative programs for typesetting are Guido and ABCplus. [http://www.noteserver.org/salieri/nview/noteserver.html Guido] is designed for changeable typesetting instructions, for producing materials for websites, and for accommodating custom extensions. Fundamentals of Guido can be found in Craig Sapp's [[Guido_Music_Notation|Guido tutorial]].

ABCplus builds on the monophonic ABC code, which was originally developed for folksong melodies. Some of the [http://en.wikipedia.org/wiki/Abc_notation history] of the original code is recounted on a wiki page. Craig Sapp provides [[ABC_Plus|brief instructions]] for and comments on its use.

====Polyphonic Codes: DARMS and SCORE====

Systems for producing full music scores that originated in the Sixties and Seventies (prior to the development of desktop systems) necessarily devised representation schemes that were compact. Data was stored mainly on large decks of punched cards, with no efficient way to edit it; input error rates were high, and processing time was long. What might seem today to be compromises with efficiency should not obscure the fact that these constraints forced developers to be ingenious. Those with the patience to parse early systems will often glimpse valuable insights in the residues of systems such as DARMS.

=====DARMS=====

The Digital Alternative Representation of Musical Scores (DARMS) was unveiled at a summer school in 1966. Among those involved in the development of DARMS was [http://www.nytimes.com/1996/10/28/arts/stefan-bauer-mengelberg-a-conductor-69.html Stefan Bauer-Mengelberg]. [http://qcpages.qc.cuny.edu/music/index.php?L=1&M=120 Raymond Erickson] wrote an extensive manual for the DARMS seminar (State University of New York at Binghamton) of 1976. [http://en.wikipedia.org/wiki/Jef_Raskin Jef Raskin] was the first person to produce printed notation from DARMS code (Pennsylvania State University, 1966).

| + | |||

| + | [[File:LuteTabFW.png|500px|thumb|right|Italian lute tablature facsimile and transcription based on DARMS extensions by Frans Wiering.]] | ||

| + | The encoding scheme was intended to be usable by encoders who did not read musical notation. With this aim in mind, pitch was represented by a note's vertical position relative to a clef, such that a note on the lowest line of a five-line staff would always be "1". Duration was indicated by a letter code based on American usage (Q=quarter, W=whole, etc.). An extensive list of codes for articulation, dynamics, and so forth was developed. | |
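The Python sketch below gives a rough illustration of position-based pitch encoding. Once a clef is known, it maps a staff position (1 = lowest line, 2 = first space, and so on) to a pitch name. It is not a DARMS parser. The token form `5Q`, the clef table, and the duration letters other than Q and W are assumptions made for this example.

```python
# Simplified sketch: DARMS-style staff positions (1 = lowest staff line) to pitch names.
DIATONIC = ["C", "D", "E", "F", "G", "A", "B"]

# Assumed pitch of the lowest staff line for each clef, as (step index, octave).
CLEF_BOTTOM_LINE = {
    "treble": (2, 4),  # E4
    "bass": (4, 2),    # G2
    "alto": (3, 3),    # F3
}

# Durations as fractions of a whole note. Q and W come from the text; H, E, S are assumed.
DURATIONS = {"W": 1.0, "H": 0.5, "Q": 0.25, "E": 0.125, "S": 0.0625}

def position_to_pitch(position: int, clef: str = "treble") -> str:
    """Map a staff position (1 = lowest line, 2 = first space, ...) to a pitch name."""
    step, octave = CLEF_BOTTOM_LINE[clef]
    absolute = octave * 7 + step + (position - 1)  # count diatonic steps upward
    return f"{DIATONIC[absolute % 7]}{absolute // 7}"

def decode(token: str, clef: str = "treble") -> tuple[str, float]:
    """Decode a hypothetical token such as '5Q' (position 5, quarter note)."""
    position, letter = int(token[:-1]), token[-1]
    return position_to_pitch(position, clef), DURATIONS[letter]
```

For example, `decode("5Q")` yields the middle line of a treble staff (B4) as a quarter note. Because the position is read relative to the clef, the encoder never has to name the pitch; the program supplies it.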

| + | |||

| + | [[File:DARMS_LinearDecomp_EX.bmp|400px|thumb|left| ]] | ||

| + | [[File:DARMS_LinearDecomp_code.bmp|400px|thumb|left|DARMS encoding using <i>linear decomposition</i> to express temporal relationships between voices.]] | ||

| + | DARMS had one feature that addressed a problem common to keyboard music and short scores: to represent a rhythmically independent second voice on a staff already containing one voice, DARMS used what was called linear decomposition. Linear decomposition (initiated by the sign "&") could have successive starting points, so that in an extended passage of this sort it could be reinitiated at each beat, at each bar, or once for the whole passage. For processing software this variability amounted to a lack of predictability. However, the problem is a pervasive one in encoding systems, and DARMS was respected for its (hypothetical) ability to accommodate such material. | |
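Whatever syntax is used to delimit the second voice, software eventually has to line the two voices up in time. The sketch below shows that step. It assumes each voice is a plain list of (pitch, duration in quarter notes) pairs, and it does not parse the DARMS "&" syntax itself.

```python
from fractions import Fraction
from itertools import accumulate

def onsets(voice):
    """Return (onset, pitch, duration) triples; each onset is the sum of earlier durations."""
    durations = [Fraction(d) for _, d in voice]
    starts = [Fraction(0)] + list(accumulate(durations))[:-1]
    return [(start, pitch, dur) for start, (pitch, _), dur in zip(starts, voice, durations)]

def align(upper, lower):
    """Group the notes of two voices by shared onset time (in quarter notes).

    Each result entry is (onset, [upper_pitch, lower_pitch]); None marks a voice
    that has no new note at that onset.
    """
    slices = {}
    for voice_index, voice in enumerate((upper, lower)):
        for start, pitch, _ in onsets(voice):
            slices.setdefault(start, [None, None])[voice_index] = pitch
    return sorted(slices.items())
```

Exact fractions avoid rounding drift when triplets or dotted values accumulate over long passages.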

| + | |||

| + | DARMS evolved into several dialects and spawned many extensions for special purposes. Among them the <i>Note Processor</i> (by [http://www.apnmmusic.org/stephendydo.html Stephen Dydo]) offered the only single-user commercially available software product based on the code. [http://www.spoke.com/people/tom-hall-3e1429c09e597c10039ab848 Tom Hall] developed a production-oriented system based on DARMS for A-R Editions, Inc. (Madison, WI). In academic circles, Lynn Trowbridge developed DARMS extensions for mensural notation. [http://www.cs.uu.nl/staff/fransw.html Frans Wiering] did the same for lute tablature. | ||

| + | |||

| + | =====SCORE===== | ||

| + | While DARMS spawned a generation of experimentation and innovation, [http://en.wikipedia.org/wiki/SCORE_(software) SCORE] has largely been the work of a single individual, [http://www.naxos.com/person/Leland_Smith/72179.htm Leland Smith], professor emeritus of music at Stanford University. A few auxiliary programs are described on the home page of [http://www.scoremus.com/ San Andreas Press], the vendor of SCORE. SCORE is generally regarded as the notation program best suited to fully professional results. Noted for its esthetic qualities, SCORE features an openly documented encoding system. | |

| + | |||

| + | SCORE was originally developed at Stanford University's [http://infolab.stanford.edu/pub/voy/museum/pictures/AIlab/list.html artificial intelligence lab] as a mainframe computer application that could produce music of arbitrary complexity. The research began in 1967 but the earliest printing was produced in 1971 via a plotter. In the 1980s SCORE was moved to [http://en.wikipedia.org/wiki/DOS DOS]-based computers, and late in the 1990s it was revised for the Windows environment. At this writing (2012) the SCORE program remains in [http://en.wikipedia.org/wiki/Fortran FORTRAN]. | ||

| + | |||

| + | Two features of 1970s plotters account for basic features of SCORE: (1) plotters required [http://en.wikipedia.org/wiki/Cartesian_coordinate_system X, Y (horizontal/vertical) coordinate] information and (2) plotters were well suited to producing [http://en.wikipedia.org/wiki/Vector_graphics vector graphics]. With respect to music, the first principle accounts for SCORE's <i>parametric</i> layout, which places every object in relation to an exact vertical/horizontal point. (All printing programs need to give an account of placement, but in most commercial software packages these details are hidden from the user or seen only in tiny glimpses on screens for refined editing.) The vector graphics used by SCORE anticipated the later development of [http://en.wikipedia.org/wiki/PostScript_fonts PostScript] fonts, including Adobe's [http://partners.adobe.com/public/developer/en/font/5045.Sonata.pdf <i>Sonata</i>] (1987), the earliest such independent font for music. | |

| + | |||

| + | Data entry in SCORE is achieved in a five-pass procedure. The first two passes encode pitch and duration. The succeeding passes (articulation, ornamentation, and dynamics; beams; slurs) are optional; their use depends on the details of the music at hand. The power of SCORE lies in (a) its extensive symbol library and free-drawing facility and (b) its conversion of input codes to an editable parametric format. Eighteen parametric fields give the user extremely detailed control of individual items through segregation by object class. Detailed coverage of SCORE's data entry procedures is provided in Craig Sapp's [http://www.ccarh.org/courses/253/handout/scoreinput/ SCORE manual]. | |
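The sketch below illustrates what parametric layout means. It represents each object as a record of eighteen numeric fields and adjusts all objects of one class at once. The field assignments (P1 as object class, P2 as staff number, P3 as horizontal position, P4 as vertical position) and the class codes are assumptions for illustration only. Consult SCORE's own documentation for the actual meaning of its parameters.

```python
from dataclasses import dataclass, field

NUM_FIELDS = 18  # eighteen parametric fields per item, as described above

# Hypothetical object-class codes, used only in this sketch.
NOTE, REST = 1, 2

@dataclass
class Item:
    """One notational object; params[0..17] stand in for fields P1..P18."""
    params: list[float] = field(default_factory=lambda: [0.0] * NUM_FIELDS)

    @property
    def object_class(self) -> int:
        return int(self.params[0])  # assumed: P1 = object class

    @property
    def staff(self) -> int:
        return int(self.params[1])  # assumed: P2 = staff number

def make_item(*values: float) -> Item:
    """Build an item from leading field values, padding the rest with zeros."""
    padded = list(values) + [0.0] * (NUM_FIELDS - len(values))
    return Item(padded[:NUM_FIELDS])

def nudge(items: list[Item], object_class: int, staff: int,
          dx: float = 0.0, dy: float = 0.0) -> None:
    """Shift every item of one class on one staff by (dx, dy) layout units."""
    for item in items:
        if item.object_class == object_class and item.staff == staff:
            item.params[2] += dx  # assumed: P3 = horizontal position
            item.params[3] += dy  # assumed: P4 = vertical position
```

Because every object carries its own coordinates, such a change touches only the selected objects; nothing else on the page moves.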

| + | |||

| + | ====Shareware and Open-Source Codes==== | ||

| + | |||

| + | =====Lilypond===== | ||

| + | Lilypond, initiated in 1996 by Han-Wen Nienhuys and Jan Nieuwenhuizen, is a non-interactive but feature-rich typesetting program for music. It was derived from MusiXTeX, a TeX typesetting program for music. As a text-based program, it does not have a graphical user interface. Examples and further information are available at the [https://en.wikipedia.org/wiki/Lilypond GNU Lilypond] entry in Wikipedia. | ||

| − | ==== | + | =====MuseScore===== |

| − | + | Unlike schemes of music representation built from the ground up, MuseScore capitalizes on components and formats already in existence to provide an open-source notation facility. Its main developer is Werner Schweer. MuseScore is built on the [https://en.wikipedia.org/wiki/Qt_%28framework%29 Qt] graphical user interface framework, which allows it to run on Windows, Linux, and Mac OS X. Its interface appears to be modeled on those of Finale and Sibelius. |

| − | + | MuseScore stores scores in its own XML-based format and relies on the markup language MusicXML for interchange. Files can be saved in compressed (.mscz) and uncompressed (.mscx) versions, and exported to MusicXML, Lilypond, [http://en.wikipedia.org/wiki/PDF Portable Document Format] (pdf), [http://en.wikipedia.org/wiki/Portable_Network_Graphics Portable Network Graphics] (png), [http://en.wikipedia.org/wiki/Svg Scalable Vector Graphics] (svg), [http://en.wikipedia.org/wiki/PostScript PostScript] (ps), MIDI (mid), and the audio format HE-AAC (m4a). Brief instructions for use and user links can be found at this [http://wiki.ccarh.org/wiki/MuseScore website]. |

===Sound-Related Codes=== | ===Sound-Related Codes=== | ||

====MIDI==== | ====MIDI==== | ||

| + | The Musical Instrument Digital Interface (MIDI) is, strictly speaking, a hardware interface. Key number is a proxy for pitch; a clock built into an electronic keyboard stamps event initiations and releases. Both of these provisions (the first vague relative to other systems of representation, the second far more exact than most) are captured in a Standard MIDI File (SMF). Music "in MIDI" usually refers to music in the Standard MIDI File format. | |

| + | |||

| + | The vast installed base of MIDI instruments and websites offering MIDI files demonstrates a heavy reliance on the format for many perfunctory tasks (teaching, amateur music-making, etc.). However, the 8-bit architecture (1988 for the file format; the hardware was in circulation for 3-5 years before that) has significant limitations. MIDI is not easily extensible. Individual manufacturers provide many proprietary extensions, but official changes must be approved by the MIDI Manufacturers' Association (MMA). | ||

| + | |||

| + | =====Main parameters and global-variable equivalents===== | ||

| + | The hardware influence is very evident in the file format, which supports only five parameters. Of these, key number (pitch) and duration (via note-on and note-off messages) are the most important. What would be called global variables in other systems (time signature, key signature, etc.) are <i>meta-events</i> in MIDI (they don't contribute to elapsed time). | |

| + | |||

| + | Other important indicators are the number of (clock) ticks per quarter note and the number of 32nd notes per quarter note (distinguishing duple from triple subdivisions of the beat). | |
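In a Standard MIDI File these values live in different places:

* the ticks-per-quarter value is stored in the header;
* the tempo comes from a Set Tempo meta-event (FF 51 03), given in microseconds per quarter note;
* the 32nd-notes-per-quarter value is the last data byte of the Time Signature meta-event (FF 58 04).

The sketch below shows how these values combine. It assumes a constant tempo throughout.

```python
def ticks_to_seconds(ticks: int, ticks_per_quarter: int,
                     microseconds_per_quarter: int = 500_000) -> float:
    """Convert a tick count to seconds at a constant tempo.

    500,000 microseconds per quarter note (120 beats per minute) is the SMF
    default when no Set Tempo meta-event is present.
    """
    return ticks * microseconds_per_quarter / (ticks_per_quarter * 1_000_000)

def parse_time_signature(data: bytes) -> dict:
    """Decode the 4 data bytes of a Time Signature meta-event (FF 58 04 nn dd cc bb)."""
    nn, dd, cc, bb = data
    return {
        "numerator": nn,
        "denominator": 2 ** dd,              # stored as a power of two
        "clocks_per_click": cc,              # MIDI clocks per metronome click
        "thirty_seconds_per_quarter": bb,    # notated 32nd notes per MIDI quarter note
    }
```

For example, 960 ticks at 480 ticks per quarter note last one second at the default tempo. The bytes `06 03 24 08` describe 6/8 time.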

| + | |||

| + | =====SMF Sections===== | ||

| + | MIDI files are divided into <i>chunks</i>. Because MIDI data is tightly packed, the <i>Header Chunk</i> must indicate its own length. MIDI files are difficult to parse and this information is essential. The Header Chunk will also indicate the MIDI format type (rarely other than "1" today) and the number of tracks (usually equivalent to the number of voices or instruments). | ||
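The sketch below reads the Header Chunk with Python's `struct` module. It assumes the file begins with the header, and it handles only metrical (ticks-per-quarter-note) timing, not SMPTE-based timing.

```python
import struct

def parse_header_chunk(data: bytes) -> dict:
    """Parse the MThd chunk at the start of a Standard MIDI File."""
    chunk_type, length = struct.unpack(">4sI", data[:8])  # big-endian ID and length
    if chunk_type != b"MThd" or length < 6:
        raise ValueError("not a Standard MIDI File header")
    fmt, ntracks, division = struct.unpack(">HHH", data[8:14])
    if division & 0x8000:  # top bit set means SMPTE-based timing
        raise NotImplementedError("SMPTE timing is not handled in this sketch")
    return {"length": length, "format": fmt, "tracks": ntracks,
            "ticks_per_quarter": division}
```

A format-1 file with three tracks at 480 ticks per quarter note starts with the 14 bytes `MThd`, length 6, then 1, 3, and 480 as 16-bit big-endian integers.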

| + | |||

| + | Each <i>Track Chunk</i> gives a list of events, with their time-stamps, constituting that track. Every <i>Note-on</i> event must have a corresponding <i>Note-off</i> event. | ||
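Within a Track Chunk, each event is preceded by a delta time (ticks since the previous event) stored as a variable-length quantity. The sketch below decodes such quantities and pairs note-ons with note-offs. It assumes the events have already been converted to absolute ticks and sorted. Pairing repeated notes on the same key first-in, first-out is a simplifying assumption.

```python
def read_vlq(data: bytes, pos: int) -> tuple[int, int]:
    """Read a variable-length quantity (7 bits per byte, high bit = 'more follows').

    Returns (value, position just after the quantity).
    """
    value = 0
    while True:
        byte = data[pos]
        pos += 1
        value = (value << 7) | (byte & 0x7F)
        if not byte & 0x80:
            return value, pos

def pair_notes(events):
    """Match note-ons to note-offs; events are (absolute_tick, status, key, velocity).

    A note-on with velocity 0 counts as a note-off. Returns (start, end, key) triples.
    """
    open_notes = {}
    notes = []
    for tick, status, key, velocity in events:
        kind, channel = status & 0xF0, status & 0x0F
        if kind == 0x90 and velocity > 0:
            open_notes.setdefault((channel, key), []).append(tick)
        elif kind == 0x80 or (kind == 0x90 and velocity == 0):
            starts = open_notes.get((channel, key))
            if starts:
                notes.append((starts.pop(0), tick, key))
    return notes
```

A note-on that never finds its note-off simply stays open here. A stricter reader might report it as an error.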

| + | |||

| + | =====General MIDI Instrument Specifications===== | ||

| + | MIDI files from the 1980s may use arbitrary instrument specifications. The General MIDI specifications have standardized usage over time. However, a few labels are misleading. "Pizzicato violin", for example, is not timbrally different from violin, but for MIDI manufacturers the label signifies an event that is terminated long before its logical time allowance has expired. Thus it is a convenient label for managing a specific sound constraint. The "science" of fine-tuning timbral quality as produced by MIDI instruments remains a field of continuing search for more true-to-life timbres. Timbral differentiation also varies from model to model and manufacturer to manufacturer. | |

| + | |||

| + | =====Efforts to Refine and Extend the SMF===== | ||

| + | Many viable proposals for extensions and refinement have been made over the past 25 years. While none has been adopted by the MMA, individual developers can point to many innovations that employ MIDI with adjunctive capabilities, ranging from more accurate transcription of musical notation from MIDI input to controllers for varying dynamics and other expressive features in live performance involving electronic instruments. | |

| + | |||

====Music V and CSound==== | ====Music V and CSound==== | ||

| − | ====Conducting | + | ====Conducting Cues and Expressive Overlays==== |

| + | A few systems have been developed as adjuncts to well established codes. For example Max Mathews' Radio Baton conducted (in three-dimensional space) a track added to MIDI files. In this case a performer (of an electronic file) could use one hand to conduct by beat while controlling tempo or articulation with the other hand. | ||

| + | |||

| + | ===Synchronic Approaches to Music Representation=== | ||

| + | In contrast to a host of codes that are tailored to one purpose, one hardware platform, or one software environment, the need to support several purposes was recognized in the 1980s. Two approaches still in use that aim to support multiple domains and purposes are described below. (Cross-platform interfaces had to wait until the late 1990s and may still suffer from slow performance.) | |

| − | |||

====MuseData==== | ====MuseData==== | ||

| − | + | MuseData, originated and developed by Walter B. Hewlett since 1982, rests on the notion that music can be encoded in such a way that professional typesetting can be supported without sacrificing the possibility of exporting information appropriate for MIDI output (for proof-listening) and feature sets for musical analysis. The MuseData system has been used to encode large repertories and large works, for the production of corresponding parts, as an archival encoding for direct data translation, and for humanized MIDI virtual "performances". | |

| − | ==== | + | ====Humdrum (kern)==== |

| + | |||

| + | ==Basic Issues in Music Representation== | ||

| + | |||

| + | ===Structural issues in data organization and processing=== | ||

| + | In order for simple encoding to be scalable to the needs of full-score production, code conversion, and bilateral support for sound production, the code itself needs to have a clear organizational structure. Two main areas of need are those of page layout and repetition and/or recapitulation of whole sections of a polyphonic movement. | |

| + | |||

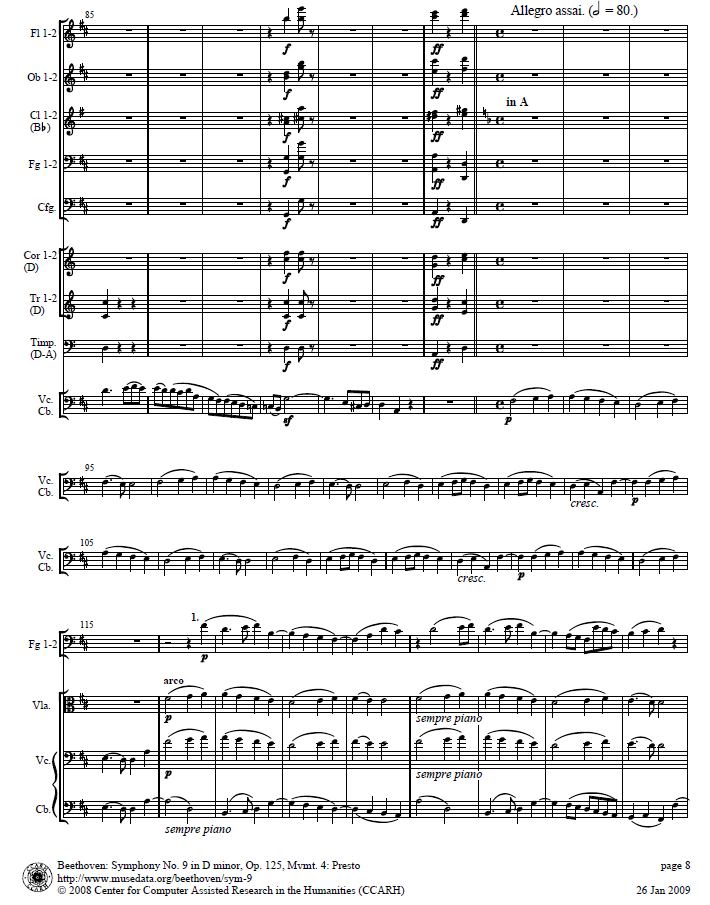

| + | [[File:ScoreOrg.png|600px|thumb|center|Horizontal elements (systems, sub-systems, and individual parts; staff lines) and vertical elements (bars; barlines and braces) in the first page of a musical score.]] | |

| + | |||

| + | ====Vertical and horizontal aspects of score layout==== | ||

| + | Music is not read in one universal way. Conductors may sweep through all the parts in one bar, then pass to the next bar: their job is to synchronize the performance of other musicians. Individual musicians are likely to concentrate on their own part, thus reading horizontally from bar to bar. For the person laying out a score on a page, system breaks and page breaks demand considerable attention. Musicians hate to be left in suspense at a precipitous point. Sensible layout improves the flow of music when it is rendered. Poor judgment in layout undermines the musician's ability to make sense of music quickly. The denigrated "widows and orphans" of typesetting assume a more dominant role in the production of musical scores. It is often necessary to squeeze an extra bar or two onto a page to avoid creating these anomalies. | |

| + | |||

| + | In processing musical data, clarity of orientation is fundamental. An encoded score can be processed vertically (part by part in each bar) or horizontally (bar by bar in each part); neither has an inherent advantage. There is, however, a related arbitrary choice in the functionality of notation programs: should parts be encoded independently, ready to be spun off individually for performers? Or should the score be encoded synchronically, with parts to be extracted subsequently? There is no preferred answer to this question. It is nevertheless important for users to note the difference of orientation from one program to another. Whichever orientation a program takes, some adjustments will need to be made to the derived scores or parts. | |
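The two processing orientations can be made concrete with a score stored as a grid indexed by part and bar. This sketch uses a hypothetical layout: every part has the same number of bars, and each cell holds one bar's contents as a string.

```python
# score[part][bar] holds the encoded contents of one bar of one part.
Score = list[list[str]]

def read_vertically(score: Score):
    """Conductor's order: every part in bar 1, then every part in bar 2, ..."""
    bars = len(score[0]) if score else 0
    for bar in range(bars):
        for part, row in enumerate(score):
            yield part, bar, row[bar]

def read_horizontally(score: Score):
    """Performer's order: every bar of part 1, then every bar of part 2, ..."""
    for part, row in enumerate(score):
        for bar, contents in enumerate(row):
            yield part, bar, contents
```

The data is identical in both cases; only the traversal differs. This is why the choice between part-based and score-based encoding is a matter of workflow rather than of content.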

| + | |||

| + | 1. In <i>SCORE</i> (as its name implies) the score is encoded system by system; systems are assembled into pages, and pages into scores. One needs a draft score on which to base the layout, because layout is specified in the encoding (and modified through a later editing stage). Parts are then derived from the score. | |

| + | |||

| + | 2. In <i>MuseData</i>, encoding is accomplished part by part. Parts are assembled into scores for full movements; movements are combined to form complete works. Some details of layout are defined in the editing stage; small refinements can be made in a later stage. Parts are derived from the score in the interest of synchronizing any changes that may have been made in editing. | ||

| + | |||

| + | 3. In <i>Finale</i> and <i>Sibelius</i> many details of layout can be selected before encoding; the music entered (frequently via MIDI keyboard) is initially laid out on a horizontal scroll of arbitrary length, although this is typically seen as layout on a virtual page. The "squeeze" factor is easily invoked to improve layout; bars are easily added or subtracted. The production of parts is easily accomplished, either by command or by highlighting. | ||

| + | |||

| + | ====Visual Grammars for scores and parts==== | ||

| + | Many users notice small flaws in notation software and high overheads on correction time to achieve a result of professional quality. The vertical and horizontal aspects of score layout are impossible to fully automate in such a way as to guarantee a perfect result every time. There is simply too much variability of content concentrated in too little space. Fully automatic layout is recognizable by excessively broad bars (geared to accommodate the bars with the largest number of notes). Fully automatic layout is also hard to read: barlines that are at the same horizontal positions on every system blend into one another and form an uninterpretable grid. | ||

| + | |||

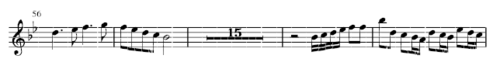

| + | [[File:MultiBarRest.png|500px|thumb|right|A 15-bar rest in the first violin part for the fifth movement of Vivaldi's concerto for flute, oboe, bassoon, and string orchestra RV 501.]] | ||

| + | =====Multi-bar rests===== | ||

| + | Correspondingly, the automatic production of parts from scores (or vice versa) will necessarily violate conventions of visual grammar. An example of a visual grammar is the use of a single symbol with a numeral to indicate a series of consecutive measures of silence in a part. In a score it is a rarity to find a bar of complete silence; in wind parts, however, there can be silences of many bars or pages. These are typically indicated in parts by multi-bar rests. | |
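Deriving a part therefore involves rewriting runs of empty bars. The sketch below assumes each bar of a part is a string and a whole-bar rest is the hypothetical token `R`. The `R:n` output notation is likewise invented for this example.

```python
from itertools import groupby

def collapse_rests(bars: list[str], rest: str = "R") -> list[str]:
    """Replace each run of two or more whole-bar rests with one multi-bar rest token."""
    out: list[str] = []
    for is_rest, group in groupby(bars, key=lambda bar: bar == rest):
        run = list(group)
        if is_rest and len(run) > 1:
            out.append(f"{rest}:{len(run)}")  # e.g. "R:15" for fifteen silent bars
        else:
            out.extend(run)  # notes, or a single rest bar, pass through unchanged
    return out
```

Fifteen consecutive rest bars, as in the Vivaldi example, would become `R:15` in the part, while the score keeps all fifteen bars.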

| + | |||

| + | [[File:Divisi_Beethoven9_leadintoFinale.png|730px|thumb|left|Beethoven's Ninth Symphony, fourth movement, violoncello and contrabass lead-in to the Finale.]] | |

| + | |||

| + | =====<i>Divisi</i> parts===== | ||

| + | The variability of participation from system to system creates more complex problems of layout in larger orchestral scores. Entire sections of the orchestra can drop out for pages. An automated layout system would retain a consistent number of parts system after system, page after page. In actual score-setting practice, though, long series of bars filled only with rests are omitted whenever possible. Their omission facilitates legibility and helps to contain the size of the score. | ||

| + | |||

| + | <i>Divisi</i> parts are more complicated. The term indicates that some of the time two or more instruments, or types of instruments, play together, while at other times they have separate parts. In music of the eighteenth century two violins and two oboes may all play in unison in some passages, while in others the oboes may have a different part from the violins, or (more confusingly) the first violin and first oboe may constitute one pair, the second violin and second oboe another. Such volatile arrangements do not change only at the ends of movements; they change in the middle of movements, completely thwarting automated layout routines. | |

| + | |||

| + | In the fourth movement of Beethoven's Ninth Symphony, for example, we see three groups of instruments on System 1: two flutes, two oboes, two bassoons, and a contrabassoon (Group 1); two horns, two trumpets, and two timpani (Group 2); and violoncello and contrabass (Group 3). On Systems 2 and 3 we see only a single line containing the violoncello and contrabass. On System 4 we see two groups: Bassoon 1 and 2 (Group 1); and viola, violoncello, and contrabass (here independent of one another) (Group 2). What may seem complex in its graphical requirements is simple in its musical explanation. The violoncello and contrabass flourish in the first bars of System 1 heralds a change: the introduction, by the same instruments, of the movement's principal theme (the famous Ode to Joy). After its ample exposition by this group (Systems 2 and 3), it is taken up by the violas (System 4) and subsequently by other instruments and voices. | |

| + | |||

| + | ===Data Interchange between Domains and Schemes=== | ||

| + | The interchange of musical data is significantly more complex than that of text. Musical data is doubly multi-dimensional. That is, pitch and duration parameters describe a two-dimensional space. The structural and organizational issues described above also force programmers to be selective in their orientation. | ||

| + | |||

| + | Additionally, most music is [http://en.wikipedia.org/wiki/Polyphony polyphonic]: it consists of more than one voice sounding simultaneously. So taking all fundamental parameters into account, a musical score is an array of two (or more) dimensional arrays. | ||

| + | |||

| + | There is no default format for musical data (analogous, let us say, to [http://en.wikipedia.org/wiki/ASCII ASCII] for text in the Roman alphabet). This absence is due largely to the great range of musical styles and methods of production that exist throughout the world. No one scheme is favorable to all situations. Data interchange inevitably involves making sacrifices. In the world of text applications [http://en.wikipedia.org/wiki/Unicode Unicode] facilitates interchange between Roman and non-Roman character sets (Cyrillic, Arabic et al.) for alphabets that are phonetic. | |

| + | |||

| + | ====MusicXML==== | ||

| + | Within the domain of musical notation [http://en.wikipedia.org/wiki/MusicXML MusicXML] is currently the most widely used scheme for data interchange, particularly for commercial software programs such as [http://en.wikipedia.org/wiki/Finale_(software) <i>Finale</i>] and [http://en.wikipedia.org/wiki/Sibelius_(software) <i>Sibelius</i>]. A particular strength of MusicXML is its ability to convert between part-based and score-based encodings. This owes to its having been modeled bilaterally on <i>MuseData</i>, for which the raw input is part-based, and the Humdrum <i>kern</i> format, which is score-based. | |

| + | |||

| + | In 2012 the MusicXML specification became the property of MakeMusic and is now hosted at this [http://www.makemusic.com/musicxml/specification website]. Tutorials are available for several special repertories. | ||

| + | |||

| + | ====Audio interchange==== | ||

| + | Many schemes for audio interchange exist in the world of sound applications. Among them are these: | ||

| + | |||

| + | * [http://en.wikipedia.org/wiki/AIFF AIFF] has been closely associated since 1988 with Apple computers. | ||

| + | * [http://en.wikipedia.org/wiki/Resource_Interchange_File_Format RIFF] has been closely associated since 1991 with Microsoft software. | ||

| + | |||

| + | Both schemes are based on the concept of "chunked" data. In sound files <i>chunks</i> segregate data records according to their purpose. Some records contain header information or metadata; some contain machine-specific code; most define the content of the work. | ||
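The chunk layout can be walked generically. The sketch below handles RIFF, the container used by WAV files, and assumes a well-formed file. Each chunk has a four-character ID, a 32-bit little-endian size, and a payload padded to an even length. AIFF follows the same chunk idea inside a FORM container but stores sizes big-endian.

```python
import struct

def iter_riff_chunks(data: bytes):
    """Yield (chunk_id, payload) for the sub-chunks of a RIFF file such as WAV."""
    riff_id, _size, _form_type = struct.unpack("<4sI4s", data[:12])
    if riff_id != b"RIFF":
        raise ValueError("not a RIFF file")
    pos = 12
    while pos + 8 <= len(data):
        chunk_id, size = struct.unpack("<4sI", data[pos:pos + 8])
        yield chunk_id.decode("ascii"), data[pos + 8:pos + 8 + size]
        pos += 8 + size + (size & 1)  # skip the pad byte after odd-sized payloads
```

A reader that does not recognize a chunk ID can skip it by its size. This is what lets chunked formats carry metadata or machine-specific records without breaking other software.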

| + | |||

| + | * [http://en.wikipedia.org/wiki/MP3 MP3] files serve a practical interest in processing audio files, which can be exorbitant in size. Compression speeds processing and transmission, but because MP3 compression is lossy, it sacrifices some of the precision of the original audio. | |

| + | |||

| + | * [http://en.wikipedia.org/wiki/MPEG MPEG], another compression scheme, is concerned with the synchronization of audio and video compression. A large number of extensions adapt it for other uses. | ||

| + | |||

| + | ====The Music Encoding Initiative (MEI)==== | ||

| + | The [http://music-encoding.org/home Music Encoding Initiative] serves non-commercial users in encoding graphical details of original musical sources such that the content of the original materials can be represented as literally as possible for publication online and in print. As a predecessor of both [http://en.wikipedia.org/wiki/HTML HTML] and [http://en.wikipedia.org/wiki/XML XML], [http://en.wikipedia.org/wiki/Standard_Generalized_Markup_Language Standard Generalized Markup Language] can be viewed as the primogenitor of projects such as the Text Encoding Initiative ([http://en.wikipedia.org/wiki/Text_Encoding_Initiative TEI]) and [http://en.wikipedia.org/wiki/Music_Encoding_Initiative MEI]. MEI is an XML-based approach suited to the markup of both musical and partially musical materials (i.e., texts with interpolations of music). | ||

| + | |||

| + | [http://music-encoding.org/documentation Documentation and sample encodings] are available here. | ||

| + | |||

| + | ====Cross-domain interchange==== | ||

| + | Attempts have been made to develop cross-domain interchange schemes. The default "cross-domain" application is [http://en.wikipedia.org/wiki/MIDI MIDI], to which we devote attention above. Since, however, MIDI represents only five parameters of music, its use in this capacity generally filters out other parameters from the original format, which must then be re-encoded in the new one. MusicXML, which is based largely on MuseData, is increasingly used for interchange between notation formats. | ||

| + | |||

| + | ==Applications of Musical Information== | ||

==References== | ==References== | ||

| − | 1. Musical symbol | + | 1. [http://beyondmidi.ccarh.org/beyondmidi-600dpi.pdf <i>Beyond MIDI: The Handbook of Musical Codes</i>], ed. Eleanor Selfridge-Field. Cambridge, MA: The MIT Press, 1997. |

| + | |||

| + | 2. Musical symbol [http://en.wikipedia.org/wiki/List_of_musical_symbols list]. N.B. The number of symbols used in music notation is constantly increasing in response to changes in musical style. | |

| + | |||

| + | 3. Repp, Bruno. [http://www.brainmusic.org/EducationalActivitiesFolder/Repp_Chopin1998.pdf "Variations on a Theme by Chopin: Relations between Perception and Production of Timing in Music"], <i>Journal of Experimental Psychology: Human Perception and Performance</i>, 24/3 (1998), 791-811. | ||

| − | + | 4. Steinbeck, Wolfram. <i>Struktur und Ähnlichkeit. Methoden automatisierter Melodienanalyse</i> (<i>Kieler Schriften zur Musikwissenschaft</i> 25). Habilitationsschrift. Kassel, 1982. | |

Latest revision as of 19:12, 27 August 2023

See also the Music 253/CS 275a Syllabus.

Contents

- 1 What is Musical Information?

- 2 Applications of Musical Information

- 3 Parameters, Domains, and Systems

- 4 Schemes of Representation

- 5 Basic Issues in Music Representation

- 6 Applications of Musical Information

- 7 References

What is Musical Information?

Musical information (also called musical informatics) is a body of information used to specify the content of a musical work. There is no single method of representing musical content. Many digital systems of musical information have evolved since the 1950s, when the earliest efforts to generate music by computer were made. In the present day several branches of musical informatics exist. These support applications concerned mainly with sound, mainly with graphical notation, or mainly with analysis.

Musical representation generally refers to a broader body of knowledge with a longer history, spanning both digital and non-digital methods of describing the nature and content of musical material. The syllables do-re-mi (identifying the first three notes of an ascending scale) can be said to represent the beginning of a scale. Unlike graphical notation, which indicates exact pitch, this representation scheme is moveable. It pertains to the first three notes of any ascending scale, irrespective of its pitch.

Applications of Musical Information

Apart from obvious uses in recognizing, editing, and printing, musical information supports a wide range of applications in certain areas of real and virtual performance, creative expression, and musical analysis. While it is impossible to treat any of these at length, some sample applications are shown here.

Parameters, Domains, and Systems

Parameters of Musical Information

Two kinds of information--pitch and duration--are pre-eminent, for without pitch there is no sound, but pitch without duration has no substance. Beyond those two arrays of parameters, others are used selectively to support specific application areas.

Pitch

Trained musicians develop a very refined sense of pitch. Systems for representing pitch span a wide range of levels of specificity. Simple discrimination between ascending and descending pitch movements meets the needs of many young children, while elaborate systems of microtonality exist in some cultures.

There are many graduated continua--diatonic, chromatic, and enharmonic scales--for describing pitch. Absolute measurements such as frequency can be used to describe pitch, but for the purposes of notation and analysis other nomenclature is used to relate a given pitch to its particular musical context.
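The gap between absolute and contextual pitch description can be sketched with MIDI key numbers. Equal-tempered frequency follows from a key number by simple arithmetic, but the note's spelling does not. The sketch assumes A4 = 440 Hz and the convention that key 60 is C4. It lists only single-sharp and single-flat spellings, not double sharps or spellings such as B#3 and Cb4.

```python
SHARP_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
FLAT_NAMES = ["C", "Db", "D", "Eb", "E", "F", "Gb", "G", "Ab", "A", "Bb", "B"]

def key_to_frequency(key: int, a4: float = 440.0) -> float:
    """Equal-tempered frequency in Hz of a MIDI key number (69 = A4)."""
    return a4 * 2 ** ((key - 69) / 12)

def key_to_names(key: int) -> set[str]:
    """Candidate spellings of a key number; musical context decides which is right."""
    octave = key // 12 - 1  # convention assumed here: key 60 is C4
    return {f"{SHARP_NAMES[key % 12]}{octave}", f"{FLAT_NAMES[key % 12]}{octave}"}
```

Key 61 yields both C#4 and Db4. Only the musical context (key, harmony, voice leading) decides which spelling notation should use.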

Duration

Duration has contrasting features: how long a single note lasts is entirely relative to the rhythmic context in which it exists. Prior to the development of the Musical Instrument Digital Interface (MIDI) in the mid-1980s, the metronome was the only widely used tool to calibrate the pace of music (its tempo). MIDI provides a method of calibration that captures very slight differences in execution in order to "record" performance in a temporally precise way. In most schemes of music representation, values are far less precise. Recent psychological studies have demonstrated that while human expectations of pitch are precise, a single piece of music accommodates widely discrepant executions of rhythm. People can be conditioned to perform music in a rote manner, with little variation from one performance to another, but deviation from a regular beat is normal.

Other Dimensions of Musical Information

Many other dimensions of musical information exist. Gestural information registers the things a performer may do to execute a work. These could include articulation marks for string instruments; finger numbers and pedal marks for piano playing; breath marks for singers and wind players; heel-toe indicators for organists, and so forth. Some attributes of music that are commonly discussed, such as accent, are implied by notation but are not actually present in the fabric of the musical work. They occur only in its execution.

Domains of Musical Information

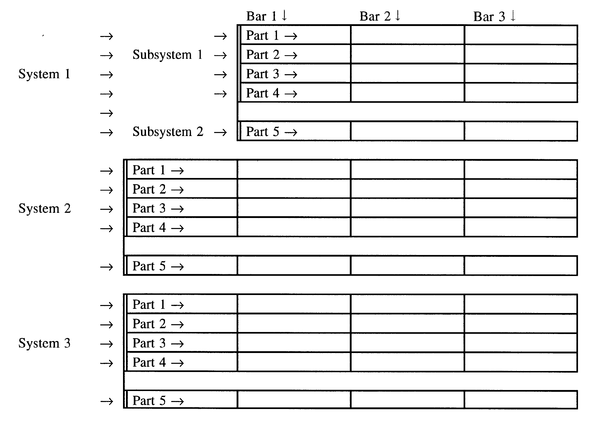

Applications of musical information (or data) are said to exist in various domains. These domains partially overlap in the information they contain, but each contains domain-specific features that cannot be said to belong to the work as a whole. The three most important domains are those of graphics (notation), sound, and that content not dependent on either (usually called the "logical" work). The range of parameters which are common to all three domains, though difficult to portray precisely, is much smaller than the composite list of all of them, as the illustration below attempts to show.

The Graphical (Notational) Domain

The notation domain requires extensive information about placement on a page or screen. Musical notation works very differently from text for two main reasons: (1) every note simultaneously represents an arbitrary number of parameters of sound and (2) most notated scores are polyphonic.

In contrast to scanned images of musical scores, encoded scores facilitate the analysis and re-display of these many parameters, singly or in any combination. Extracted parameters can also be recombined to create new works (a topic treated at Stanford in a separate course, Music 254/CS 275B).

The Sound Domain

The sound domain requires information about musical timbre. In the notation domain a dynamic change may be represented by a single symbol, but in the sound domain a dynamic change will affect the parameters of a series of notes. In relation to notated music, sound makes phenomenal what is in the written context merely verbal (tempo, dynamics) or symbolic (pitch, duration). In its emphasis on the distinctions between verbal prescriptions and actual (sounding) phenomena, semiotics offers a ready-made intellectual framework for understanding music encoding in relation to musical sound.
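The contrast between a single symbol and its effect on a series of notes can be sketched by spreading a crescendo hairpin across MIDI velocities. Linear interpolation and the example velocity values are assumptions; real performances shape dynamics far less mechanically.

```python
def crescendo(num_notes: int, start_velocity: int, end_velocity: int) -> list[int]:
    """Spread one hairpin over a series of notes as linearly interpolated velocities."""
    if num_notes == 1:
        return [start_velocity]
    step = (end_velocity - start_velocity) / (num_notes - 1)
    return [round(start_velocity + i * step) for i in range(num_notes)]
```

In notation the hairpin is one object; in the sound domain the same instruction becomes, say, `crescendo(5, 40, 80)`, which gives a distinct velocity for each of five notes.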

The Logical Domain

The logical domain of music representation is somewhat elusive in that while it retains parameters required to fully represent a musical work, it shuns information that is exclusively concerned with sight or sound. Pitch and duration remain fundamental to musical identity, but whether eighth notes are beamed together or have separate stems is exclusively a concern of printing. If, however, beamed groups of notes are considered (in a particular context) to play an important role in some aspect of visual cognition, they may be considered conditionally relevant. The parameters and attributes encoded in a "logical" file are therefore negotiable and, from one case to the next, may seem ambiguous or contradictory.

Systems of Musical Information

Many parametric codes for music have been invented without adequate consideration of their intended purpose and the requirements that follow from it. A systematic approach normally begins with a top-down decision about which domain(s) will be emphasized. Many parameters are notation-specific, while others are sound-specific.

Domain differences favor domain-specific approaches: it is more practical to develop and employ code that is task- or repertory-specific than to disentangle large numbers of variables addressing multiple domains in a "complete" representation system. However, if the purpose is to support interchange between domains (as is often the case with music-information retrieval and music analysis), then planning from the outset to link information across domains becomes very important.

Notation-oriented schemes

Notation programs favor the graphical domain because notated music is only comprehensible if the spatial position of every object is correct and if the visual relationships between objects are precise. Particularly in Common Western Notation (CMN), there is an elaborate visual grammar. There are also national conventions and publisher-specific style requirements to consider. Here we concentrate on CMN but emphasize that as the requirements of music change, the manner in which music is notated also changes. Non-common notations often require special software for rendering.

Notational information links poorly with sound files. It is optimized for rapid reading and quick comprehension by performers. At an abstract level, the visual requirements of notation have little to do with sound. Beamed groups provide an apt example of the need to facilitate rapid comprehension: beams mean nothing in sound. Barlines are similarly silent, but they help performers maintain a steady meter.

Sound-oriented schemes

Although notation describes meter and rhythm, it does so symbolically; in an ideal sense, the symbolic description itself occupies no time. In sound files, however, time is a fundamental variable. Time operates simultaneously on two (sometimes more) levels. Meter gives an underlying pulse which is never entirely metronomic in performance (Repp [3]). Whereas in a score the intended meter can usually be determined from one global parameter, in a sound file it must be deduced. The object of interest then becomes the deviation of every sounding pitch from this implied steadiness.
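To make the last point concrete, here is a minimal Python sketch. It assumes that beat onset times (in seconds) have already been detected in a recording, one per notated beat, and estimates the implied steady pulse with an ordinary least-squares line; the function name `pulse_deviations` is hypothetical. Real tempo tracking must also cope with gradual tempo change, which a single straight line ignores.

```python
def pulse_deviations(onsets):
    """Estimate a steady pulse from beat onsets and measure each beat's deviation.

    Fits onset[i] ~ offset + period * i by ordinary least squares, then
    returns (period, deviations), where deviations[i] is how early (negative)
    or late (positive) beat i falls relative to the fitted steady pulse.
    """
    n = len(onsets)
    if n < 2:
        raise ValueError("need at least two beat onsets")
    mean_i = (n - 1) / 2          # mean beat index
    mean_t = sum(onsets) / n      # mean onset time
    cov = sum((i - mean_i) * (t - mean_t) for i, t in enumerate(onsets))
    var = sum((i - mean_i) ** 2 for i in range(n))
    period = cov / var            # fitted seconds per beat
    offset = mean_t - period * mean_i
    deviations = [t - (offset + period * i) for i, t in enumerate(onsets)]
    return period, deviations
```

For instance, with invented onsets `[0.00, 0.52, 0.98, 1.51, 2.00]` the fitted period is close to half a second, and each beat deviates from the steady pulse by a few hundredths of a second or less.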

Apart from pitch and duration, other important qualities of sounding music are timbre, dynamics, and tempo. Timbre defines the quality of the sound produced; many factors underlie differences in timbre. Dynamics refer to levels of volume. Tempo refers to the speed of the music. Relative changes in dynamics and in tempo are both important in engaging a listener's attention.

Sound files can be synchronized with notation files only with difficulty, mainly because what is precise in one domain is vague in the other, and vice versa.
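Once the position of each beat in a recording has been marked (the hard part, often done by hand), moving from score position to clock time is comparatively simple. The Python sketch below assumes such a hypothetical beat map, a list whose k-th entry is the time in seconds of beat k, and interpolates linearly between marked beats. It illustrates only the easy direction of synchronization; the function name is illustrative.

```python
def beat_to_seconds(beat, beat_times):
    """Map a score position in beats to seconds in a recording.

    beat_times[k] is the (hypothetical, hand-annotated) time in seconds at
    which beat k is heard; positions between beats are linearly interpolated.
    """
    if len(beat_times) < 2:
        raise ValueError("need at least two annotated beats")
    last = len(beat_times) - 1
    if not 0 <= beat <= last:
        raise ValueError("beat lies outside the annotated range")
    k = min(int(beat), last - 1)  # index of the annotated beat at or before `beat`
    frac = beat - k               # fraction of the way to the next annotated beat
    return beat_times[k] + frac * (beat_times[k + 1] - beat_times[k])
```

Because the beat map records the performer's actual timing, the same notated half-beat can correspond to different lengths of clock time at different points in the piece.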

Data Acquisition (Input)

For notation

Many systems for the acquisition of musical data exist. The two most popular forms of capture today are (1) from a musical keyboard (via MIDI) and (2) via optical recognition (scanning). Both provide incomplete data for notational applications. Keyboard capture misses many features specific to notation (features not shared by sound, such as beams, slurs, and ornaments); optical recognition is an imperfect science that can fail to "see" arbitrary symbols or misinterpret them. Commercial programs for optical recognition tend to focus on providing MIDI data rather than a full complement of notational features. The details of what may be missed or misinterpreted vary with the program. In either case, serious users must modify files by hand.

Hand-correction of automatically acquired materials can be very costly in terms of time. For some repertories complete encoding by hand may save time and produce a more professional result, but outcomes vary significantly with software, user familiarity with the program, and the quantity of detail beyond pitch and duration that is required.

Statistical measures give little guidance on the efficiency of various input methods. A claimed 90% accuracy rate means little if barlines are in the wrong place, clef changes have been ignored, text underlay is required, and so forth.

Visual prompts for data entry to a virtual keyboard may help novices come to grips with the complexity of musical data. Musicians are accustomed to grasping several features of conventional notation (pitch, duration, articulation) in one reading pass, because a musical note combines information about these features in one symbol. Computers, however, cannot reliably decode these synthetic lumps of information.

For sound

MIDI is the preferred way to capture sound data to a file that can be processed for diverse purposes. It provides a convenient way to store sound data for further use and for sharing. It is frequently used to generate notation and is useful for rough sketches of a work. Its capabilities for refined notation are limited, particularly by its inarticulate representation of pitch: a MIDI note number records which key was played, not how the pitch is spelled.
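The limitation can be shown in a few lines of Python. The sketch assumes the common convention that MIDI note 60 is middle C, labelled C4 here (some manufacturers label it C3); the helper `midi_number` is illustrative, not part of any MIDI library. Enharmonically distinct spellings collapse to the same number, so a notation program must later guess the spelling from context.

```python
# Semitones above C for each natural note letter.
LETTER_SEMITONES = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}
# Semitone adjustment for each accidental ("" means natural).
ALTER = {"": 0, "#": 1, "##": 2, "b": -1, "bb": -2}


def midi_number(letter, accidental, octave):
    """MIDI key number for a spelled pitch, using the convention C4 = 60 (middle C)."""
    return 12 * (octave + 1) + LETTER_SEMITONES[letter] + ALTER[accidental]
```

Both `midi_number("C", "#", 4)` and `midi_number("D", "b", 4)` give 61, and `midi_number("B", "#", 3)` gives 60, the same number as C4: once captured as MIDI, the three spellings are indistinguishable.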

For logical information

Logical information is usually encoded by hand or acquired by data translation from another format. For simple repertories and short pieces logical scores may be acquired from MIDI data, but if the intended use is analytical, then verification of content is so important that manual data entry may be more efficient.
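One reason MIDI-derived scores need checking is that performed onsets must be snapped to notated values. Below is a minimal Python sketch, assuming a single known tempo and a sixteenth-note grid (a quarter-note beat divided by four); `quantize_onsets` is a hypothetical name. Because performances are never strictly metronomic, a fixed-tempo quantizer like this misplaces notes whenever the player speeds up or slows down, which is exactly the kind of error manual verification has to catch.

```python
from fractions import Fraction


def quantize_onsets(onsets_sec, tempo_bpm, grid=Fraction(1, 4)):
    """Snap onset times (in seconds) to the nearest multiple of `grid` beats.

    Assumes one constant tempo for the whole passage. Results are exact
    Fractions of a beat. Python's round() sends exact halves to the even
    neighbour, so an onset midway between grid points is resolved that way.
    """
    sec_per_beat = 60.0 / tempo_bpm
    result = []
    for t in onsets_sec:
        beats = t / sec_per_beat      # onset measured in (fractional) beats
        steps = round(beats / grid)   # nearest grid index
        result.append(steps * grid)
    return result
```

At 120 beats per minute, onsets of 0.0, 0.26, 0.49 and 0.77 seconds come out as beats 0, 1/2, 1 and 3/2.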

Data Output

All schemes of music representation are based on the assumption that users will wish to access and extract data. When output does not match a user's expectations it can be difficult to attribute a cause. Errors can result both from flawed or incomplete data and from inconsistencies or lapses in the processing software. However, all schemes of music representation are necessarily incomplete, because music itself is constantly in flux.

From notation software

The extraction of data sufficient to provide conventional music notation requires a considerable number of parameters. Hand correction is almost always necessary for scores of professional quality.

Lapses are greatest for repertories that do not entirely fall within the bounds of Common Western Notation (European-style music composed between c. 1650 and 1950) or which have an exceptionally large number of symbols per page (much of the music of the later nineteenth century, such as that of Verdi and Tchaikovsky). Even some popular repertories are difficult to reproduce (usually because of unconventional requirements) with complete accuracy.

From sound-generating software

The ear is a quicker judge than the eye but also a less forgiving one. Errors in sound files are usually conspicuous, but they can be difficult to correct; how difficult depends mainly on the graphical user interface provided by the sound software.

From logical-data files

Logical-data files are difficult to verify for accuracy and completeness unless they can be channeled either to sound or notational output of some kind. This means that the results of analytical routines run on them can be flawed by the data itself, though the algorithms used in processing must be examined as well.

Schemes of Representation

Notation Codes

Schemes for representing music for notation and display range from lists of codes for the production of musical symbols such as CMN to extensible languages in which symbols are interrelated and may be prioritized in processing. Some notation codes are essentially printing languages in their own right but may lose any sense of musical logic in their assembly.

Some users judge notation codes by the aesthetic qualities of the output. Professional musicians tend to prefer notation that is properly spaced and in which the proportional sizes of objects clarify meaning and facilitate easy reading. For this reason notation software is heavily laden with spacing algorithms and provides the user with many utilities for altering automatic placement and size.
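As an illustration of the kind of rule spacing algorithms apply, the Python sketch below gives each note a fixed base width plus a fixed increment for every doubling of its duration, so that space grows roughly logarithmically rather than in strict proportion to time. This is a common engraving heuristic, not the method of any particular program; the function name, parameter names, and default values are invented for the example.

```python
import math


def note_spacing(duration, shortest, base=1.0, increment=0.5):
    """Horizontal space (arbitrary units) allotted to a note.

    `duration` and `shortest` are note values as fractions of a whole note
    (e.g. 0.25 for a quarter). The shortest value in the passage gets `base`;
    each doubling of duration beyond it adds `increment`.
    """
    return base + increment * math.log2(duration / shortest)
```

With the defaults, in a passage whose shortest value is a sixteenth, `note_spacing(0.25, 0.0625)` gives 2.0: a quarter note receives only twice the width of a sixteenth even though it lasts four times as long.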

Musical notation is not an enclosed universe. In the West it has been in development for a millennium. In many parts of the Third World, cultural values discourage the use of written music other than in clandestine circles of students of one particular musical practice. Contemporary composers constantly stretch the limits of Common Western Notation, guaranteeing that graphical notation will continue to evolve.

A fundamental variable of approaches to notation is that of the conceptual organization of a musical work, coupled with the order in which elements are presented. Scores can be imagined as consisting of parts, measures, notes, pages, sections, movements, and so forth. Only one of these can be taken as fundamental; other elements must be related to it.
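The consequence of choosing one organizing unit can be seen in a small Python sketch (all names hypothetical). A score stored part by part must be reorganized before it can be processed measure by measure, and this simple conversion works only when every part has the same number of measures. Scores in which parts do not share a common barring need a more elaborate alignment.

```python
def parts_to_measures(score_by_part):
    """Turn {part_name: [measure_0, measure_1, ...]} into a list of measures,
    each a {part_name: measure_content} dict, so the score can be walked
    measure by measure instead of part by part."""
    counts = {len(measures) for measures in score_by_part.values()}
    if len(counts) > 1:
        raise ValueError("parts do not have the same number of measures")
    n = counts.pop() if counts else 0
    return [
        {part: measures[i] for part, measures in score_by_part.items()}
        for i in range(n)
    ]
```

Whichever unit is stored as fundamental, the other view has to be derived like this, and any mismatch (a missing measure in one part, for example) surfaces only at conversion time.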

Early representation systems encoded works page by page. More recent systems lay a score out on a horizontal scroll that can be segmented according to page layout instructions provided by the user.
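Segmenting a horizontal scroll into lines can be sketched as greedy packing: measures are added to the current system until the next one would overflow it. The Python below assumes measure widths are already known in some arbitrary unit, and the function name is hypothetical. Production software typically also stretches measures to justify each line and may weigh page turns and other constraints, which this sketch does not attempt.

```python
def break_into_systems(measure_widths, line_width):
    """Greedily pack consecutive measures onto systems no wider than line_width.

    Returns a list of systems, each a list of measure indices. A single
    measure wider than line_width is placed on a system of its own.
    """
    systems, current, used = [], [], 0.0
    for i, w in enumerate(measure_widths):
        # Start a new system if this measure would overflow a non-empty one.
        if current and used + w > line_width:
            systems.append(current)
            current, used = [], 0.0
        current.append(i)
        used += w
    if current:
        systems.append(current)
    return systems
```

With widths `[30, 40, 50, 20, 60]` and a line width of 100, the function returns `[[0, 1], [2, 3], [4]]`.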

Monophonic Codes: EsAC and Plaine and Easie Code

Two coding systems that have been unusually durable are the Essen Associative Code (EsAC) and Plaine and Easie. Each is tied to a long-term ongoing project. Both are designed for monophonic music (music for one voice only). Both have been translated into other formats, thus realizing greater potential than the originators imagined.

EsAC

The purpose of EsAC was to encode European folksongs in a compact format. EsAC has a fascinating pre-history, rooted in the widespread collections of folksong made in the nineteenth and early twentieth centuries, when German was spoken across a wide swath of the Continent.

A (typescript) code was developed to "notate" each song according to a single set of rules. Some metadata (titles, locales) and musical parameters (meter, key) were noted. The script was necessarily based on ASCII characters. [The underlying code was used for typescript "transcriptions" in unedited collections in Central and Eastern Europe (Austria, Croatia, Poland, Slovenia, Serbia) and elsewhere.]

Adaptation of this material to mainframe computers was begun in the 1970s by the Deutsche Volkslied Archiv (DVA) in Freiburg im Breisgau (Germany). A pioneering work of musical analysis across the different repertories encoded was completed by Wolfram Steinbeck (1982). This inspired the late Helmut Schaffrath, an active member of the International Council for Traditional Music, to adapt some of the DVA materials to a minicomputer. Several of his students at the Essen Hochschule für Musik (later renamed the Folkwang Universität der Künste) wrote software to implement analysis routines commonly applied to folksongs. The subset became the Essen Folksong Collection; the code, correspondingly, became EsAC. Schaffrath's work, which took a separate direction from that of the DVA, was carried out from the 1980s until his death in 1994. The work was later continued by Ewa Dahlig-Turek at the EsAC data website.

The EsAC encoding system is easily understood within the context of its original purposes: it is designed for music which is monophonic. Although all the works have lyrics, the encoding system was not designed to accommodate lyrics. (Efforts to add them have disclosed certain limitations of the system.) The range of a human voice rarely exceeds three octaves, but given that the ideal tessitura varies from one person to another, the concept of a fixed key is of negligible importance. Pitch is therefore encoded according to a relative system accommodating a three-octave span [Table 2].
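A relative pitch code of this kind can be decoded once a tonic is supplied. The Python sketch below assumes the following token syntax, which should be checked against the EsAC documentation before use: an optional `-` or `+` for the lower or upper octave, a scale degree from 1 to 7 measured on a major scale above the tonic (0 for a rest), and an optional `#` or `b` raising or lowering the degree by a semitone. The function name and the use of MIDI numbers for output are illustrative choices, not part of EsAC.

```python
# Semitones above the tonic for scale degrees 1-7 (assumed major-scale basis).
MAJOR_STEPS = [0, 2, 4, 5, 7, 9, 11]


def esac_pitch(token, tonic_midi):
    """Convert an EsAC-style pitch token to a MIDI number, or None for a rest.

    Assumed syntax: optional '-' (lower octave) or '+' (upper octave),
    a scale degree 1-7 (0 = rest), then an optional '#' or 'b'.
    The three octave registers together give the three-octave span.
    """
    octave = 0
    if token.startswith("-"):
        octave, token = -1, token[1:]
    elif token.startswith("+"):
        octave, token = 1, token[1:]
    degree = int(token[0])
    if degree == 0:
        return None  # rest
    alter = {"#": 1, "b": -1}.get(token[1:2], 0)
    return tonic_midi + 12 * octave + MAJOR_STEPS[degree - 1] + alter
```

With G4 (MIDI 67) as tonic, `esac_pitch("-5", 67)` gives 62 (D4), and `esac_pitch("0", 67)` gives `None`. Changing the tonic transposes the whole song without touching its encoding, which is the point of a relative system.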

A smallest duration, given in the header (preliminary) information, is taken as the default value. Longer note values are indicated by appended underline characters. Sample files are shown in this handout of EsAC samples. Further details are given in Beyond MIDI, Ch. 24.
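A similar sketch for duration, again in Python and with assumed conventions that should be verified against the EsAC documentation: the header's smallest value is given as a reciprocal (16 for a sixteenth note), each underscore doubles the duration, and a trailing dot adds half its value. Durations are returned as exact fractions of a whole note; the function name is hypothetical.

```python
from fractions import Fraction


def esac_duration(token, smallest):
    """Duration of an EsAC-style note token as a fraction of a whole note.

    Assumptions: `smallest` is the header's smallest value (16 = sixteenth);
    each '_' in the token doubles that value; a trailing '.' adds half again.
    """
    value = Fraction(1, smallest) * 2 ** token.count("_")
    if token.endswith("."):
        value += value / 2  # dotted: one and a half times the value
    return value
```

With 16 as the smallest value, the tokens `5`, `5_`, `5__` and `5_.` come out as 1/16, 1/8, 1/4 and 3/16 of a whole note.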

Plaine and Easie Code

The purpose of Plaine and Easie (P&E) code was to prepare virtual catalogues of musical manuscripts. It was designed to give an exact, complete description of every indication on a musical score. Although only incipits (beginning passages) are encoded, a P&E encoding might include tempo and dynamic markings; grace notes, appoggiaturas, and other ornaments; marks for staccato and legato; and so forth. It is based on the original, unedited music, including clef signs rarely seen today and word spellings that may be obsolescent.
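To show how compactly P&E packs these parameters, here is a Python sketch that tokenizes a small subset of the musical-data string. The subset (listed in the comments) reflects common P&E usage but is an assumption of this example rather than the full specification; clef, key, and time-signature fields, grace notes, ornaments, and groupings are all ignored, and the starting octave is an arbitrary default. Octave and duration marks persist until changed, so a single digit can govern a whole run of notes.

```python
import re

# Subset of Plaine and Easie data syntax assumed for this sketch:
#   octave marks:    '  ''  '''  (octave beginning at middle C, and above)
#                    ,  ,,       (octaves below middle C)
#   duration digits: 1 2 4 8 6 3 (whole to thirty-second), optional '.' for a dot
#   accidentals x (sharp), b (flat), n (natural) placed before a note letter A-G
#   '-' is a rest and '/' a barline; octave and duration persist until changed.
DURATIONS = {"1": 1, "2": 2, "4": 4, "8": 8, "6": 16, "3": 32}
TOKEN = re.compile(r"('+|,+)|([124863]\.?)|([xbn])?([A-G])|(-)|(/)")


def parse_pae(data, octave=4):
    """Return a list of events from a P&E-style data string (subset only).

    Notes are ("note", spelled_name, octave, (denominator, dotted));
    rests are ("rest", (denominator, dotted)); barlines are ("bar",).
    """
    duration = None
    events = []
    for m in TOKEN.finditer(data):
        oct_mark, dur, acc, letter, rest, bar = m.groups()
        if oct_mark:
            # ' = 4, '' = 5, ... ; , = 3, ,, = 2, ...
            if oct_mark[0] == "'":
                octave = 3 + len(oct_mark)
            else:
                octave = 4 - len(oct_mark)
        elif dur:
            duration = (DURATIONS[dur[0]], dur.endswith("."))
        elif letter:
            events.append(("note", (acc or "") + letter, octave, duration))
        elif rest:
            events.append(("rest", duration))
        elif bar:
            events.append(("bar",))
    return events
```

For example, `parse_pae("'4C8DxF/")` yields a quarter-note C followed by eighth-note D and F-sharp, all in the octave beginning at middle C, and then a barline: eight characters carry pitch, octave, duration, accidental, and measure structure.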

P&E has always been associated with the international online catalogue of (notated) music sources developed by RISM. The online project was originally focused on music manuscripts from the seventeenth and eighteenth centuries, but parallel projects initially committed to paper are scheduled to appear online. At this writing (January 2014) the online RISM database of manuscripts contains c. 875,000 entries, which come from more than 60 countries. This constellation of projects was begun in 1952. The database structure in which the musical data exists has more than a hundred text fields and is searchable in many ways. Links to the digitized manuscripts directly from the RISM listing are updated monthly.

Typesetting Codes: CMN, MusiXTeX, Lilypond, Guido, ABCplus